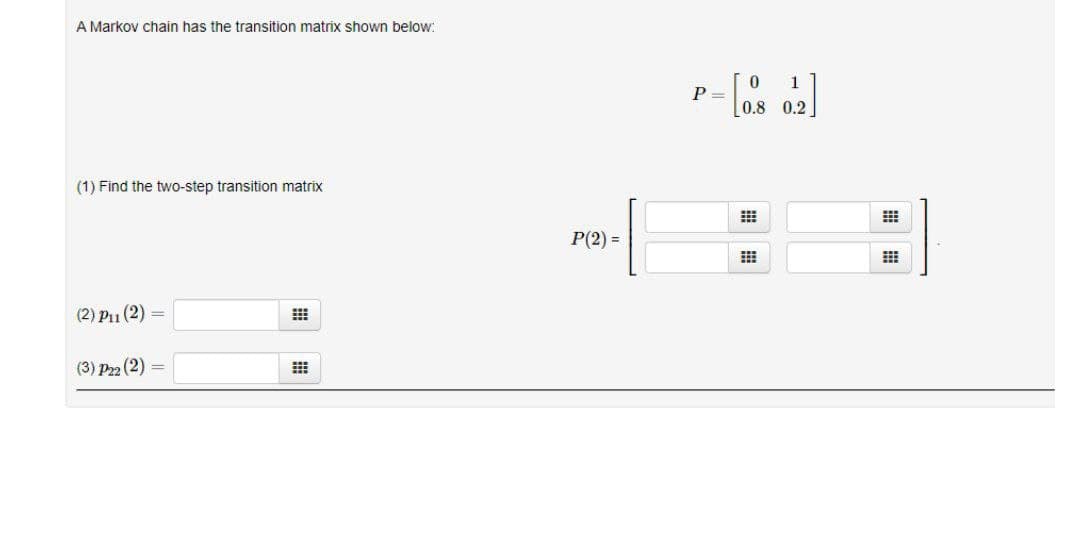

A Markov chain has the transition matrix $P$ shown in the image above (first row: $0.8,\ 0.2$; second row: an entry of $1$). Find:

(1) the two-step transition matrix $P(2)$

(2) $P_{1}(2)$

(3) $P_{22}(2)$
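The two-step transition matrix of a Markov chain is the square of the one-step matrix, $P(2) = P \cdot P$. The following is a minimal sketch of that computation in plain Python. The first row (0.8, 0.2) comes from the question; the second row (1, 0) is an assumption, since only a "1" survives in the text and each row must sum to 1. The helper name `matmul` is illustrative.

```python
def matmul(a, b):
    """Multiply two square matrices given as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

# Row-stochastic transition matrix. The first row is taken from the question;
# the second row (1, 0) is an ASSUMPTION, not confirmed by the source.
P = [[0.8, 0.2],
     [1.0, 0.0]]

# Two-step transition matrix P(2) = P * P
P2 = matmul(P, P)

for row in P2:
    print([round(x, 4) for x in row])
```

Under the assumed second row, the entries of `P2` are the two-step probabilities, for example `P2[0][0]` is the chance of being back in state 1 after two steps when starting in state 1. If the actual second row differs, only the definition of `P` needs to change.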

Q: 5. Identify a type: ordinal, nominal, discrete or continuous? a. On = 1, Off = 0 c. Age b. political…

A: Nominal data is used to label variables without any order or quantitative value Ordinal data is…

Q: In response to concerns about nutritional contents of fast foods, McDonald's has announced that it…

A:

Q: If probability events A and B are independent, what can we be sure of? P(A ∩ B) = P(A) P(B) P(A) +…

A: From the provided information, There are two events A and B which are independent.

Q: A bowl contains 3 red chips and 4 white chips. Three chips are drawn from the bowl. What is the…

A: Given Information: There are 3 red chips and 4 white chips. Three chips are drawn from the bowl. The…

Q: 39. How many ways can 12 students be seated around a circular table?

A: We need to find, in how many ways can 12 students be seated around a circular table.
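For a round table, seatings that differ only by rotation count as the same arrangement, so n people can be seated in (n - 1)! ways. A minimal sketch of that count, assuming rotations are identified but reflections are not (the function name `circular_arrangements` is illustrative):

```python
from math import factorial

def circular_arrangements(n: int) -> int:
    """Seatings of n distinct people around a round table,
    counting rotations of the same seating once: (n - 1)!"""
    return factorial(n - 1)

print(circular_arrangements(12))
```

For 12 students this evaluates 11!, which is 39,916,800.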

Q: Suppose that 11% of all steel shafts produced by a certain process are nonconforming but can be…

A: Given that n=200,p=0.11

Q: Do it completely and typewritten for an upvote

A: Given the word STATISTICS, how many permutations can be made from its letters?
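When a word has repeated letters, the number of distinct arrangements is n! divided by the factorial of each letter's count. STATISTICS has 10 letters with S × 3, T × 3, I × 2, A × 1, C × 1. A short sketch of the count (the function name `distinct_permutations` is illustrative):

```python
from collections import Counter
from math import factorial

def distinct_permutations(word: str) -> int:
    """Distinct arrangements of all letters of `word`:
    n! / (c1! * c2! * ...), where ci are the letter counts."""
    total = factorial(len(word))
    for count in Counter(word).values():
        total //= factorial(count)  # exact: each partial quotient is an integer
    return total

print(distinct_permutations("STATISTICS"))
```

This evaluates 10! / (3! · 3! · 2!) = 3,628,800 / 72 = 50,400.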

Q: . A nationwide survey of college seniors by the University of Michigan revealed that almost 70%…

A: Given that n = 12, p = 0.7: a. find P(7 ≤ X < 9); b. find P(X ≤ 5); c. find P(…

Q: Do it completely and typewritten for an upvote.

A:

Q: Use the given frequency distribution table, estimate the following: Class boundaries Frequency (f)…

A: Data given (class: frequency): 11 - 16: 3; 16 - 21: 5; 21 - 26: 7; 26 - 31: 9

Q: Use the confidence interval to find the estimated margin of error. Then find the sample mean. A…

A: Solution: The given confidence interval is ( 3.0, 3.6) Lower limit= 3.0 Upper limit= 3.6
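A symmetric confidence interval is centered on the sample mean, so the margin of error is half the interval's width and the sample mean is its midpoint. A minimal sketch using the limits quoted above (the function name `margin_and_mean` is illustrative):

```python
def margin_and_mean(lower: float, upper: float) -> tuple[float, float]:
    """Return (margin of error, sample mean) for a symmetric interval."""
    margin = (upper - lower) / 2   # half the interval width
    mean = (upper + lower) / 2     # midpoint of the interval
    return margin, mean

margin, mean = margin_and_mean(3.0, 3.6)
print(round(margin, 2), round(mean, 2))
```

For the interval (3.0, 3.6) this gives a margin of error of 0.3 and a sample mean of 3.3.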

Q: A manufacturing machine has a 10% defect rate. If 3 items are chosen at random, what is the…

A: 1) It is given that p=0.10 and n=3.

Q: Solve the following problems involving permutations and combinations. Show your solution. 1. In how…

A: "Since you have posted a question with multiple subparts, we will solve first 3 sub-parts for you.…

Q: A person applies for a certain job, and the probability that he or she is qualified is 0.285. The…

A:

Q: Consumer Reports uses a survey of readers to obtain customer satisfaction ratings for the nation's…

A: There are two independent samples which are Publix and Trader Joe’s. We have to test whether there…

Q: 3. The time between arrivals of taxis at a busy intersection is exponentially distributed with a…

A:

Q: An advertisement claims that 63.9% of customers are satisfied with a certain bank. What is the…

A: Given that n = 490, p = 63.9% = 0.639, q = 1 - p = 1 - 0.639 = 0.361

Q: If X ~ χ²(5), then P(0.554 < X < 15.09) (using the χ² table) 0.980 0.950 0.05

A: Given that X~χ2(5) Df=5 Find P(0.554<X<15.09)
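One way to finish this from a standard right-tail chi-square table: in the row for 5 degrees of freedom, 0.554 is the critical value with right-tail area 0.99 and 15.086 (rounded here to 15.09) is the critical value with right-tail area 0.01. The probability between them is then a difference of the two tail areas:

```latex
P(0.554 < X < 15.09)
  = P(X > 0.554) - P(X > 15.09)
  \approx 0.99 - 0.01
  = 0.98
```

This matches the listed option 0.980.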

Q: A box contains 5 green, 7 yellow, and 10 orange ping pong balls. How many ways can 5 balls be…

A: It is given that Number of green balls = 5 Number of yellow balls = 7 Number of orange balls = 10…

Q: Use a normal approximation to find the probability of the indicated number of voters. In this case,…

A: It is given that n is 153 and p=0.22.

Q: It is commonly believed that the mean body temperature of a healthy adult is 98.6° F. You are not…

A: As per our guidelines, we are supposed to answer only three subparts of any question, so I am solving…

Q: A person applies for a certain job, and the probability that he or she is qualified is 0.225. The…

A: From the provided information, The probability that he or she is qualified is 0.225, P (Q) = 0.225…

Q: 3. What is C(7,1)? А. 1 В. 7 С. 14 D. 49 4. What is the combination of 8 objects taken 3 at a time?…

A: As per the guidelines, experts have to answer only the first question; I have done two answers for you, dear…

Q: 2. A bag contains six identical balls: two reds,3 blues and one yellow. Three balls are drawn from…

A: Given: A bag contains six identical balls: 2 reds, 3 blues and 1 yellow. 3 balls are drawn from this…

Q: Suppose you are playing a game where you bet $5 and have a 48% chance of winning. The bet is 1:1.…

A: Given: you are playing a game where you bet $5. Chance of winning = 48%, so P(win) = 0.48…

Q: Assume that females have pulse rates that are normally distributed with a mean of µ = 75.0 beats per…

A: Given that μ = 75.0, σ = 12.5, n = 4. By using the standard normal distribution we solve this problem.

Q: 6. The life of a semiconductor laser at a constant power is normally distributed with a mean of 7000…

A: Given: mean (μ) = 7000, standard deviation (σ) = 600

Q: Construct the confidence interval for the population mean μ. c = 0.95, x̄ = 15.9, σ = 5.0, and n = 100…

A: Given that Sample size n =100 Sample mean =15.9 Population standard deviation=5
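With a known population standard deviation, the interval is x̄ ± z·σ/√n, where z is the standard normal critical value for the chosen confidence level. A minimal sketch using only the Python standard library (the function name `z_interval` is illustrative):

```python
from math import sqrt
from statistics import NormalDist

def z_interval(mean: float, sigma: float, n: int, confidence: float):
    """Confidence interval for a population mean with known sigma."""
    # Two-sided critical value, e.g. about 1.96 for 95% confidence
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    margin = z * sigma / sqrt(n)
    return mean - margin, mean + margin

low, high = z_interval(15.9, 5.0, 100, 0.95)
print(round(low, 2), round(high, 2))
```

For the values given, the margin is about 1.96 × 5 / 10 = 0.98, so the interval is approximately (14.92, 16.88).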

Q: 1. In how many ways can 8 members of Board of Directors be chosen from 5 juniors and 7 seniors if…

A: Let us suppose there are three marbles viz. a red, a green and a blue. From these, there are three…

Q: A discrete probability distribution is given by the table below. x: 1, 2, 3, 4, 5; Pr(X=x): a, a, 2a, 0.2, 0.4. The…

A:

Q: A factory produces bars of chocolate that follow a Normal Distribution with a mean weight of 60g and…

A: Given: population mean μ = 60, standard deviation σ = 2. Let X be the factory-produced chocolate bar…

Q: Height (in meter) Learner Consider the heights of 5 learners. Suppose you are interested in…

A: Since you have posted a question with multiple subparts, we will solve first three subparts for you.…

Q: find the probability of being dealt a hand of four cards from a standard deck that contains all four…

A: find the probability of being dealt a hand of four cards from a standard deck that contains all…

Q: Test the claim that the mean GPA of night students is significantly different than 3.1 at the 0.2…

A:

Q: Assignment: find out the probability where the values of favorable and unfavorable are equal…

A: Given information: The values of favourable and unfavourable are equal.

Q: Contingency Tables and Conditional Probability: Homework and Passing a Course A teacher categorized…

A: Solution

Q: Which of the following statements are true about random sampling: O four tosses of an unbiased coin…

A: Random sampling: A simple random sample of size n is collected from a large population where each…

Q: expected profit of 1 percent of the amount it would have to pay out upon death. Find the annual…

A: Here, take C = premium. Expected profit = 1% of 200000 = PHP 2000. P(die during the year) = 0.02. x =…

Q: a) Determine the cumulative distribution function of flange thickness. b) Determine the proportion…

A: Here, the thickness of the flange is uniformly distributed between 0.95 and 1.05 mm, so a = 0.95 mm and b = 1.05 mm.

Q: From the following data: 24, 109, 16, 128, 46, 86, 57, 37 The median

A:
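The median is the middle value of the sorted data; with an even number of observations it is the average of the two middle values. A short sketch with the eight values from the question, using the standard library:

```python
from statistics import median

data = [24, 109, 16, 128, 46, 86, 57, 37]

# Sorted: 16, 24, 37, 46, 57, 86, 109, 128 -> middle pair is 46 and 57
print(sorted(data))
print(median(data))
```

With eight observations the two middle values are 46 and 57, so the median is (46 + 57) / 2 = 51.5.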

Q: udents enrolled in each degree program. Classify the sampling method. a. simple random b. stratified…

A: Given: number of students the university polled = 500, in proportion to the number of students…

Q: A quality-control inspector has ten assembly lines from which to choose products for testing. Each…

A: A quality-control inspector has ten assembly lines from which to choose products for testing. Each…

Q: The population of a country follows a normal distribution with mean height 175 cm and standard…

A:

Q: OBABILITY | SM025 Every year two teams, Unggul and Bestari meet each other in a debate competition.…

A:

Q: Pineapples 6. P (Bananas only) в. 5/31 7. P (Mangoes U Pineapples) A. 5/21 C. 10/61 D. 10/34 A. 9/62…

A: We have given that a group of 62 students were surveyed, and it was found that each of students…

Q: Find the mean and standard deviation interpretation

A: Solution: Given that,

Q: 28. A box contains 5 green, 7 yellow, and 10 orange ping pong balls. How many ways can 5 balls be…

A: As per our guidelines, we are supposed to answer three subparts. Given: no. of green balls = 5, no. of…

Q: Cost of Pizzas A pizza shop owner wishes to find the 95% confidence interval of the true mean cost…

A:

Q: An experiment was conducted to compare a teacher-developed curriculum, that was standards-based,…

A:

Q: 3. The breaking strength of yarn used in manufacturing drapery material is required to be at least…

A:

- Explain how you can determine the steady state matrix $X$ of an absorbing Markov chain by inspection. Consider the Markov chain whose matrix of transition probabilities $P$ is given in Example 7b. Show that the steady state matrix $X$ depends on the initial state matrix $X_0$ by finding $X$ for each $X_0$: a. $X_0 = [0.25,\ 0.25,\ 0.25,\ 0.25]$, b. $X_0 = [0.25,\ 0.25,\ 0.40,\ 0.10]$. Example 7, Finding Steady State Matrices of Absorbing Markov Chains: Find the steady state matrix $X$ of each absorbing Markov chain with matrix of transition probabilities $P$. b. $P = \begin{bmatrix} 0.5 & 0 & 0.2 & 0 \\ 0.2 & 1 & 0.3 & 0 \\ 0.1 & 0 & 0.4 & 0 \\ 0.2 & 0 & 0.1 & 1 \end{bmatrix}$
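The dependence on $X_0$ can be checked numerically by applying $P$ repeatedly until the state stops changing. The sketch below is one way to do that, not the textbook's method. It assumes the flattened matrix in the exercise reads row by row as a 4 × 4 column-stochastic matrix (each column sums to 1, with states 2 and 4 absorbing) and that each $X_0$ is a column of four probabilities. The function names `step` and `steady_state` are illustrative.

```python
def step(P, x):
    """One transition x -> P x for a column-stochastic matrix P."""
    return [sum(P[i][j] * x[j] for j in range(len(x))) for i in range(len(P))]

def steady_state(P, x0, iterations=200):
    """Approximate the limit of P^n x0 by repeated multiplication."""
    x = list(x0)
    for _ in range(iterations):
        x = step(P, x)
    return x

# ASSUMED reading of the flattened matrix: columns sum to 1,
# states 2 and 4 are absorbing (their columns are unit vectors).
P = [[0.5, 0.0, 0.2, 0.0],
     [0.2, 1.0, 0.3, 0.0],
     [0.1, 0.0, 0.4, 0.0],
     [0.2, 0.0, 0.1, 1.0]]

for x0 in ([0.25, 0.25, 0.25, 0.25], [0.25, 0.25, 0.40, 0.10]):
    print([round(v, 4) for v in steady_state(P, x0)])
```

Under these assumptions the two starting vectors settle on different limits, roughly (0, 0.5536, 0, 0.4464) and (0, 0.6554, 0, 0.3446), which illustrates that the steady state of an absorbing chain depends on where the chain starts. All probability ends up in the absorbing states 2 and 4; only the split between them changes.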