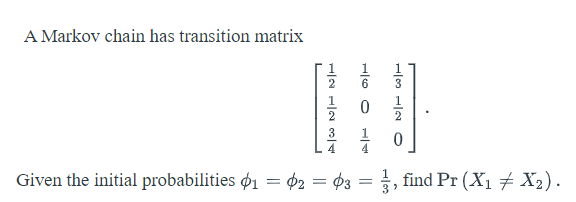

A Markov chain has the transition matrix shown in the image above. Given the initial probabilities $\phi_1 = \phi_2 = \phi_3 = \ldots$, find $\Pr(X_1 \neq X_2)$.

Q: The odds against a student X solving a Mathematics problem are 8:6 and the odds in favour of student...

A:

Q: I roll three different loaded dice. For the first die the probability of getting a 5 is 0.64, for th...

A:

Q: 5. The probability density function of the time to failure of a copier (in hours) is $f(x) = e^{-x/1000}$ ...

A: Given,

Q: The number of chocolate chips in a popular brand of cookie is normally distributed with a mean of 22...

A:

Q: their "Go Business" project are normally distributed with a mean of P4,250 and a standard deviation ...

A: Given : The weekly sales of ABM students on their "Go Business" project are normally distributed wit...

Q: Question 9: Suppose the cumulative distribution function of the random variable X is given by 0 if ...

A:

Q: )A gasket to be drawn has type B defect under the condition that it has a type A defect. A gasket to...

A: Define two events: A, a gasket has type A defects; B, a gasket has type B defects T...

Q: An experiment consists of rolling a pair of fair cubic dice and recording the sum of their outcomes....

A: Given that - An experiment consists of rolling a pair of fair cubic dice and recording the sum of th...
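The question is cut off after "recording the sum of their outcomes", so the exact event asked for is unknown. As a minimal sketch (assuming two fair six-sided dice, as stated), the full distribution of the sum can be enumerated exactly from the 36 equally likely outcomes; `sum_distribution` is a hypothetical helper name.

```python
from collections import Counter
from fractions import Fraction
from itertools import product


def sum_distribution(faces: int = 6) -> dict[int, Fraction]:
    """Exact pmf of the sum of two fair dice with `faces` sides each."""
    # Count how many of the faces**2 equally likely ordered pairs give each sum.
    counts = Counter(a + b for a, b in product(range(1, faces + 1), repeat=2))
    total = faces * faces
    return {s: Fraction(c, total) for s, c in sorted(counts.items())}


if __name__ == "__main__":
    for s, p in sum_distribution().items():
        print(s, p)
```

Any event about the sum (for example "the sum is 7") is then a lookup or a sum over this table.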

Q: Of 20 rats in a cage, 12 are males and 9 are infected with a virus that causes hemorrhagic fever. Of...

A:

Q: An airline normally serves a meal on a long flight. If there are two meal options, what is the likeli...

A: Let n be the sample size, n = 6. Let p be the probability of selecting a given meal option, p = 1/2 = 0.5. Let X denot...
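Taking the answer's setup at face value (n = 6 passengers, each picking a given meal option with p = 0.5), X is binomial. The event the question asks about is truncated, so this sketch only tabulates the binomial pmf $P(X = k) = \binom{n}{k} p^k (1-p)^{n-k}$; `binomial_pmf` is a hypothetical helper name.

```python
from math import comb


def binomial_pmf(k: int, n: int, p: float) -> float:
    """P(X = k) for X ~ Binomial(n, p)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


n, p = 6, 0.5  # values taken from the answer above
for k in range(n + 1):
    print(k, round(binomial_pmf(k, n, p), 4))
```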

Q: Find the probabilities for each using the standard normal distribution. P( z> 0.56)

A: We have to find P(z > 0.56)
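A quick numerical check, using the identity $P(Z > z) = 1 - \Phi(z)$ and Python's standard-library `statistics.NormalDist` for the standard normal CDF $\Phi$:

```python
from statistics import NormalDist

z = 0.56
p_upper = 1 - NormalDist().cdf(z)  # P(Z > z) = 1 - Phi(z) for the standard normal
print(round(p_upper, 4))  # about 0.2877
```

This agrees with the usual table lookup $\Phi(0.56) \approx 0.7123$.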

Q: When women turn 40, their physicians typically remind them that it is time to undergo mammography sc...

A: According to Bartleby guidelines, we solve the first three subparts; the rest can be reposted... G...

Q: The percentage chance that the test will be positive = The probability that, given a positive r...

A: It is a problem of conditional probability.

Q: Suppose that 56% of students play football, 53% of students play cricket, and 12% of students pla...

A: Let F = students who play football and C = students who play cricket. Given: $P(F) = 0.56$, $P(C) = 0.53$, $P(\bar{F} \cap \bar{C}) = 0.12$.
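Following the answer's reading that 12% play neither sport, De Morgan's law gives $P(F \cup C) = 1 - P(\bar{F} \cap \bar{C})$, and inclusion-exclusion then gives $P(F \cap C)$. The question itself is truncated, so this is a sketch of the quantities that follow from the given data, not necessarily the exact ones asked for. `Fraction` is used to avoid floating-point rounding in the subtraction.

```python
from fractions import Fraction

p_f = Fraction("0.56")        # P(F): plays football
p_c = Fraction("0.53")        # P(C): plays cricket
p_neither = Fraction("0.12")  # P(not F and not C)

p_union = 1 - p_neither       # De Morgan: (not F and not C) is the complement of (F or C)
p_both = p_f + p_c - p_union  # inclusion-exclusion: P(F and C)
p_f_only = p_f - p_both       # football but not cricket
p_c_only = p_c - p_both       # cricket but not football

print(float(p_union), float(p_both), float(p_f_only), float(p_c_only))
```

The four disjoint pieces (football only, cricket only, both, neither) sum to 1, which is a useful sanity check.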

Q: A company will hire 7 men and 4 women. In how many ways can the company choose from 9 men and 6 wome...

A:
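Assuming the truncated question asks for the number of ways to pick 7 of the 9 men and, independently, 4 of the 6 women, the multiplication principle gives $\binom{9}{7}\binom{6}{4}$:

```python
from math import comb

# Choose 7 of 9 men and, independently, 4 of 6 women; multiply the counts.
ways = comb(9, 7) * comb(6, 4)
print(ways)  # 540
```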

Q: Find the probability that: (i) A leap year has 53 Sundays. (ii) A leap year has 53 Sundays or Monday...

A:
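A leap year has 366 days, that is, 52 full weeks plus 2 extra consecutive days. Assuming the 7 possible starting weekdays for that extra pair are equally likely, and reading part (ii) as "53 Sundays or 53 Mondays" (the text is cut off), the probabilities can be checked by enumerating the 7 pairs:

```python
from fractions import Fraction

DAYS = ["Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun"]

# The 2 extra days are consecutive: (Mon, Tue), (Tue, Wed), ..., (Sun, Mon).
extra_pairs = [(DAYS[i], DAYS[(i + 1) % 7]) for i in range(7)]


def prob(event) -> Fraction:
    """Probability that the extra pair satisfies `event`, each pair equally likely."""
    hits = sum(1 for pair in extra_pairs if event(pair))
    return Fraction(hits, len(extra_pairs))


p_sun = prob(lambda pair: "Sun" in pair)
p_sun_or_mon = prob(lambda pair: "Sun" in pair or "Mon" in pair)
print(p_sun, p_sun_or_mon)  # 2/7 3/7
```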

Q: 6. The probability density function of the length of a hinge for fastening a door is f(x) = 1.25 for...

A: The probability density function gives the density, not the probability, at each possible value of a continuous ran...

Q: Question 1 In a shipment of 50 parts, the company accidentally included 7 defective parts. If an ins...

A:

Q: 6. Jordan needs to determine the value of 1.75 + (…). A. Use the number line provided below to create...

A: We are given a number line that contains a set of integers. We have to obtain the direction of moving...

Q: An automobile manufacturing plant produced 33 vehicles today: 15 were motorcycles, 5 were trucks, an...

A:

Q: Three students from a 13-student class will be selected to attend a meeting. 5 of the students are f...

A:

Q: A bag contains 9 Cherry Starbursts and 21 other flavored Starbursts. 12 Starbursts are chosen randoml...

A: Given: number of Cherry Starbursts = 9, number of other flavored Starbursts = 21, total number of Starbursts = 30.
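Drawing 12 of the 30 Starbursts without replacement makes the number of cherry ones hypergeometric. Which count the question asks about is cut off, so this sketch (assuming draws are without replacement, as "chosen randomly" from a bag usually implies) tabulates the whole pmf $P(K = k) = \binom{9}{k}\binom{21}{12-k} / \binom{30}{12}$; `p_cherry` is a hypothetical helper name.

```python
from math import comb

N_TOTAL, N_CHERRY, N_DRAWN = 30, 9, 12


def p_cherry(k: int) -> float:
    """P(exactly k cherry among the 12 drawn) under a hypergeometric model."""
    return (
        comb(N_CHERRY, k)
        * comb(N_TOTAL - N_CHERRY, N_DRAWN - k)
        / comb(N_TOTAL, N_DRAWN)
    )


for k in range(N_CHERRY + 1):
    print(k, round(p_cherry(k), 4))
```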

Q: A certain event is one that will always happen. Its probability is _____. The sum of the probabilit...

A: Formula used: $P(E) + P(E^c) = 1$

Q: Suppose that 2 balls are randomly selected from an urn containing 3 red, 4 blue, and 5 white balls. ...

A: Given: 3 red, 4 blue, and 5 white balls; X = number of red balls, Y = number of white balls, and n = 2 balls drawn.
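With 2 balls drawn without replacement from 12, the joint pmf counts the ways to get x red, y white and the remaining $2 - x - y$ blue: $P(X = x, Y = y) = \binom{3}{x}\binom{5}{y}\binom{4}{2-x-y} / \binom{12}{2}$. A minimal sketch of that table (`joint_pmf` is a hypothetical name):

```python
from fractions import Fraction
from math import comb

RED, BLUE, WHITE, DRAWS = 3, 4, 5, 2
TOTAL = RED + BLUE + WHITE


def joint_pmf(x: int, y: int) -> Fraction:
    """P(X = x, Y = y): x red and y white among the 2 balls drawn."""
    blue = DRAWS - x - y  # the remaining draws must be blue
    if x < 0 or y < 0 or blue < 0:
        return Fraction(0)
    return Fraction(comb(RED, x) * comb(WHITE, y) * comb(BLUE, blue), comb(TOTAL, DRAWS))


# Rows are x = 0, 1, 2 (red); columns are y = 0, 1, 2 (white).
for x in range(DRAWS + 1):
    print([str(joint_pmf(x, y)) for y in range(DRAWS + 1)])
```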

Q: Suppose E and F are events from a sample space S. What can you conclude from p(E|F)=0?

A:

Q: After rolling a fair twenty-sided die 200 times, the observed (or experimental) probability of rolli...

A: A 20-sided fair die is rolled.

Q: The probability of a successful optical alignment in the assembly of an optical data storage product...

A: Given $p = 0.8$ and $q = 1 - 0.8 = 0.2$, the random variable X follows a geometric distribution with $p = 0.8$: $P(X = x) = 0.8\,(0.2)^{x-1}$, $x = 1, 2, \ldots$
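The geometric pmf above, with X counting the attempts up to and including the first successful alignment, can be sketched as follows. The question is truncated, so $P(X \le 3)$ is shown only as an illustration; `geometric_pmf` is a hypothetical helper name.

```python
def geometric_pmf(x: int, p: float = 0.8) -> float:
    """P(X = x) = p * (1 - p)**(x - 1), for x = 1, 2, ... (first success on trial x)."""
    if x < 1:
        return 0.0
    return p * (1 - p) ** (x - 1)


# Illustration: probability the first success happens within 3 attempts.
p_within_3 = sum(geometric_pmf(x) for x in range(1, 4))
print(round(p_within_3, 4))  # 1 - 0.2**3 = 0.992
```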

Q: The life time of an electrical part follows an exponential distribution with mean of 5 years. Calcul...

A:
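The question is cut off at "Calcul...", so what it asks to calculate is unknown. This sketch only encodes the exponential model with mean 5 years (rate $\lambda = 1/5$ per year), whose CDF is $P(T \le t) = 1 - e^{-\lambda t}$, and evaluates the survival probability $P(T > 5)$ as an illustration.

```python
from math import exp

MEAN_YEARS = 5.0
RATE = 1 / MEAN_YEARS  # lambda = 1 / mean for an exponential distribution


def p_fails_before(t: float) -> float:
    """P(T <= t) = 1 - exp(-lambda * t)."""
    return 1 - exp(-RATE * t)


def p_survives(t: float) -> float:
    """P(T > t) = exp(-lambda * t)."""
    return exp(-RATE * t)


print(round(p_survives(5), 4))  # e**-1, about 0.3679
```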

Q: Research was conducted on the amount of oxygen runners could utilize during training, known as their...

A: Given data is VO2 Max(ml/kg/min)(x) 5k Finishing Time(min)(y) 16.28 34.66 20.55 37.41 18.6...

Q: A clinical trial is being conducted in order to determine the efficacy of a new drug used to treat R...

A: From the provided information, Margin of error (E) = 3.0 mg/dL Standard deviation (σ1) = 8 mg/dL and...

Q: Suppose that 61% of all college seniors have a job prior to graduation. If a random sample of 75 col...

A: Given $n = 75$ and $p = 0.61$. Mean: $\mu = np = 75 \times 0.61 = 45.75$. Standard deviation: $\sigma = \sqrt{np(1-p)} = \sqrt{75 \times \ldots}$
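Using n = 75 and p = 0.61 from the answer, the binomial mean and standard deviation follow directly from $\mu = np$ and $\sigma = \sqrt{np(1-p)}$ (note the square root):

```python
from math import sqrt

n, p = 75, 0.61
mu = n * p                       # binomial mean
sigma = sqrt(n * p * (1 - p))    # binomial standard deviation
print(round(mu, 2), round(sigma, 4))  # 45.75 and about 4.224
```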

Q: Question: How many different groups of seven balls can be drawn from a barrel containing balls numbe...

A:

Q: Assume that human body temperatures are normally distributed with a mean of 98.23°F and a standard d...

A:

Q: Two students are working as part-time proofreaders for a local newspaper. Based on previous aptitude...

A:

Q: Using all 1991 birth records in the computerized national birth certificate registry compiled by the...

A: Given: mean $\mu = 3432$, standard deviation $\sigma = 482$.

Q: Data were collected from a survey given to graduating college seniors majors. From that data, a prob...

A:

Q: Three cards are drawn in succession, without replacement, from an ordinary deck of cards. Find the p...

A: Given: let A be the event that the first card is a black jack and B the event that the second card ...

Q: An ordinary (fair) coin is tossed 3 times. Outcomes are thus triples of "heads" (h) and ...

A:
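The question is truncated right after it defines the outcomes, so this sketch only enumerates the 8 equally likely triples and evaluates one illustrative event ("at least two heads"), which is an assumption and not necessarily the event asked for.

```python
from fractions import Fraction
from itertools import product

# All 2**3 equally likely outcomes of three tosses of a fair coin, e.g. "hth".
outcomes = ["".join(t) for t in product("ht", repeat=3)]

# Illustrative event only; the event in the original question is cut off.
at_least_two_heads = [o for o in outcomes if o.count("h") >= 2]

print(outcomes)
print(Fraction(len(at_least_two_heads), len(outcomes)))  # 1/2
```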

Q: 11-15 1- 2 3 4 P(x) 0.11 0.21 0.22 0.39 0.07 16-20. 2 P'(x) 0. 12 0.42 0.36 0.09

A:

Q: Activity: Answer the following and illustrate each under the normal curve: 1. Compute the probabilit...

A: 1) Given: the probability (area) to the left of $z = -1.25$.
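The area to the left of $z = -1.25$ is just the standard normal CDF, $\Phi(-1.25)$. A quick check with the standard-library `statistics.NormalDist`:

```python
from statistics import NormalDist

# Area under the standard normal curve to the left of z = -1.25, i.e. Phi(-1.25).
area_left = NormalDist().cdf(-1.25)
print(round(area_left, 4))  # about 0.1056
```

By symmetry this equals $1 - \Phi(1.25)$, the area to the right of $z = 1.25$.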

Q: Time Isopods (pill bugs) Temperature (warm) Average (Roaches Temperature (warm) Average (Isopods (pill bugs...

A: The data given are as follows: Time, Average (Isopods) warm, Average (Roaches temperature) warm, Aver...

Q: If two gene pairs A and a and B and b are assorting independently with A dominant to a and B dominan...

A: Since you have posted a question with multiple subparts, we will solve the first three subparts for you....

Q: Consider independent events A and B. If P(A) = 0.2 and P(B) = 0.4, determine P(A ∩ B). 0.60 0.80 0.0...

A:

Q: Nine hundred and thirty-two businessmen accepted the Mayaman Challenge of one of the Philanthropic F...

A: Given: mean $\mu = 135$, standard deviation $\sigma = 15$, number of businessmen who accepted the challenge = 932.

Q: a) What are the mass points of X? b) What are the mass points of Y? c) Solve for P(X=2, Y=2) d) Sol...

A: If X and Y are two discrete random variables the probability distribution for their simultaneous occ...

Q: CNNBC recently reported that the mean annual cost of auto insurance is 1026 dollars. Assume the stan...

A: Let X be the random variable from bell-shaped (normal) distribution with mean (μ) = 1026, standard d...

Q: You are dealt one card from a standard 52-card deck. Find the probability of being dealt a heart. Th...

A:

Q: A bag contains several marbles, 15 of which are blue, 11 are yellow, and 8 are green. Answer the fol...

A:

Q: Establish the formula $(n_0^{-1} + n^{-1})^{-1}(\bar{x} - \theta_0)^2 = n\bar{x}^2 + n_0\theta_0^2 - n_1\theta_1^2$, where $n_1 = n_0 + n$ and $\theta_1 = (n_0\theta_0 + \ldots$

A:
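A short derivation of the identity. The definition of $\theta_1$ is cut off in the question, so this assumes the usual precision-weighted mean $\theta_1 = (n_0\theta_0 + n\bar{x})/n_1$ with $n_1 = n_0 + n$; under that assumption, expanding the right-hand side and cancelling terms gives the left-hand side.

```latex
\begin{aligned}
n\bar{x}^2 + n_0\theta_0^2 - n_1\theta_1^2
  &= n\bar{x}^2 + n_0\theta_0^2 - \frac{(n_0\theta_0 + n\bar{x})^2}{n_1} \\
  &= \frac{(n_0 + n)(n\bar{x}^2 + n_0\theta_0^2) - (n_0\theta_0 + n\bar{x})^2}{n_1} \\
  &= \frac{n_0 n\,\bar{x}^2 + n_0 n\,\theta_0^2 - 2 n_0 n\,\theta_0\bar{x}}{n_1} \\
  &= \frac{n_0 n}{n_0 + n}\,(\bar{x} - \theta_0)^2
   = \left(n_0^{-1} + n^{-1}\right)^{-1}(\bar{x} - \theta_0)^2 .
\end{aligned}
```

The key step is that the $n_0^2\theta_0^2$ and $n^2\bar{x}^2$ terms cancel, leaving $n_0 n$ times a perfect square.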

Q: Assuming no sequencing errors, two DNA sequences TGAC and CATGA can be assembled into the original s...

A: Given Assuming no sequencing errors, two DNA sequences TGAC and CATGA can be assembled into the orig...
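A minimal sketch of the idea, assuming the two reads should be joined at their longest suffix/prefix overlap (a greedy rule; real assemblers use more elaborate overlap graphs). Both orders are tried and the shorter, more overlapped result is kept; the helper names are hypothetical.

```python
def merge_with_overlap(left: str, right: str, min_overlap: int = 1) -> str:
    """Append `right` to `left`, fusing the longest suffix of `left`
    that equals a prefix of `right` (at least `min_overlap` characters)."""
    for k in range(min(len(left), len(right)), min_overlap - 1, -1):
        if left.endswith(right[:k]):
            return left + right[k:]
    return left + right  # no qualifying overlap: plain concatenation


def assemble_pair(a: str, b: str) -> str:
    """Try both orders and keep the shorter (more overlapped) assembly."""
    return min(merge_with_overlap(a, b), merge_with_overlap(b, a), key=len)


print(assemble_pair("TGAC", "CATGA"))  # CATGAC
```

Here CATGA ends with TGA, which is also the start of TGAC, so the reads fuse into CATGAC.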


- Explain how you can determine the steady-state matrix X of an absorbing Markov chain by inspection.
- Consider a continuous-time Markov chain with three states {0, 1, 2} and transition rates as follows: q01 = 3, q12 = 5, q21 = 6, q10 = 4, and the remaining rates are zero. Find the limiting probabilities for the chain.
- Consider the problem of sending a binary message, 0 or 1, through a signal channel consisting of several stages, where transmission through each stage is subject to a fixed probability of error α. Suppose that X0 = 0 is the signal that is sent and let Xn be the signal that is received at the nth stage. Assume that {Xn} is a Markov chain with transition probabilities P00 = P11 = 1 - α and P01 = P10 = α, where 0 < α < 1. (a) Determine P{X0 = 0, X1 = 0, X2 = 0}, the probability that no error occurs up to stage n = 2. (b) Determine the probability that a correct signal is received at stage 2.
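A sketch for the last two questions above, with hypothetical helper names. For the continuous-time chain, the only nonzero rates link 0 with 1 and 1 with 2, so it is a birth-death chain and the limiting probabilities satisfy the cut equations $3\pi_0 = 4\pi_1$ and $5\pi_1 = 6\pi_2$ together with $\pi_0 + \pi_1 + \pi_2 = 1$. For the channel, (a) is two correct transmissions in a row, $(1-\alpha)^2$, and (b) adds the case of two errors that cancel, $(1-\alpha)^2 + \alpha^2$.

```python
from fractions import Fraction


def ctmc_limiting_3state(q01: int, q10: int, q12: int, q21: int):
    """Limiting probabilities of the birth-death CTMC 0 <-> 1 <-> 2.
    Uses the cut equations pi0*q01 = pi1*q10 and pi1*q12 = pi2*q21."""
    pi0_rel = Fraction(q10, q01)  # pi0 / pi1
    pi2_rel = Fraction(q12, q21)  # pi2 / pi1
    pi1 = 1 / (pi0_rel + 1 + pi2_rel)  # normalise so the three sum to 1
    return pi0_rel * pi1, pi1, pi2_rel * pi1


def channel_probs(alpha: float):
    """Two-stage binary channel that starts from X0 = 0."""
    no_error = (1 - alpha) ** 2                   # (a) P00 * P00
    correct_at_2 = (1 - alpha) ** 2 + alpha ** 2  # (b) zero flips or two flips
    return no_error, correct_at_2


pi = ctmc_limiting_3state(q01=3, q10=4, q12=5, q21=6)
print(pi)  # (Fraction(8, 19), Fraction(6, 19), Fraction(5, 19))
print(channel_probs(0.1))
```

Global balance at state 1 is a quick check: inflow $3\pi_0 + 6\pi_2 = 54/19$ equals outflow $(4 + 5)\pi_1 = 54/19$.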

- Consider the following stochastic system. Let Xn be the price of a certain stock (rounded to the nearest cent) at the time that the stock market closes on the n-th day starting today. Would it be appropriate to model this system as a discrete-time Markov chain?
- List the Gauss–Markov conditions required for applying t- and F-tests.
- Find the vector w = [w1, w2] of stable probabilities for the Markov chain whose transition matrix is $P = \begin{pmatrix} 0.6 & 0.4 \\ 0.3 & 0.7 \end{pmatrix}$.
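For a 2-state chain, the stable vector w with $wP = w$ satisfies the balance equation $w_1 P_{12} = w_2 P_{21}$ plus $w_1 + w_2 = 1$, so $w = [P_{21}, P_{12}] / (P_{12} + P_{21})$. A minimal sketch with exact fractions (`stationary_2state` is a hypothetical name):

```python
from fractions import Fraction


def stationary_2state(P):
    """Stable vector w with wP = w for a 2x2 stochastic matrix P."""
    a, b = P[0][1], P[1][0]  # probability of leaving state 1, of leaving state 2
    return [b / (a + b), a / (a + b)]


P = [[Fraction(6, 10), Fraction(4, 10)],
     [Fraction(3, 10), Fraction(7, 10)]]
w = stationary_2state(P)
print(w)  # [Fraction(3, 7), Fraction(4, 7)]
```

Multiplying back, $wP$ returns $[3/7, 4/7]$, confirming the vector is stationary.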