This is a coding question. Now that you have worked out the gradient descent and the update rules, try to program a Ridge regression. Please complete the code. Note that the data set we use here has just one explanatory variable, so the Ridge regression we create has just one variable (or feature).

Once you have finished the program, what are the observations and the corresponding predictions using Ridge? Then make a plot to showcase how well your model's predictions match the observations. Use a scatter plot for the observations and a line plot for your model's predictions. Observations are in red and predictions are in green. Add appropriate labels to the x axis and the y axis, and a title to the plot. You may also need to fine-tune hyperparameters such as the learning rate and the number of iterations.
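For reference, here is one common way to write the one-feature Ridge cost and its gradient-descent update rules. It assumes the L2 penalty is divided by the number of training examples $m$ and that the intercept $b$ is not penalised; $\alpha$ plays the role of `learning_rate` and $\lambda$ the role of `l2_penality` in the starter code.

$$
\begin{aligned}
\hat{y}_i &= w x_i + b, \qquad
J(w, b) = \frac{1}{m}\left[\sum_{i=1}^{m} \left(y_i - \hat{y}_i\right)^2 + \lambda w^2\right] \\
\frac{\partial J}{\partial w} &= \frac{1}{m}\left[-2\sum_{i=1}^{m} x_i\left(y_i - \hat{y}_i\right) + 2\lambda w\right], \qquad
\frac{\partial J}{\partial b} = -\frac{2}{m}\sum_{i=1}^{m}\left(y_i - \hat{y}_i\right) \\
w &\leftarrow w - \alpha\,\frac{\partial J}{\partial w}, \qquad
b \leftarrow b - \alpha\,\frac{\partial J}{\partial b}
\end{aligned}
$$

Setting both derivatives to zero gives the point the iterations should approach, $w = S_{xy} / (S_{xx} + \lambda)$ and $b = \bar{y} - w\bar{x}$, where $S_{xy} = \sum_i (x_i - \bar{x})(y_i - \bar{y})$ and $S_{xx} = \sum_i (x_i - \bar{x})^2$. This closed form is a handy check on a gradient-descent implementation.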

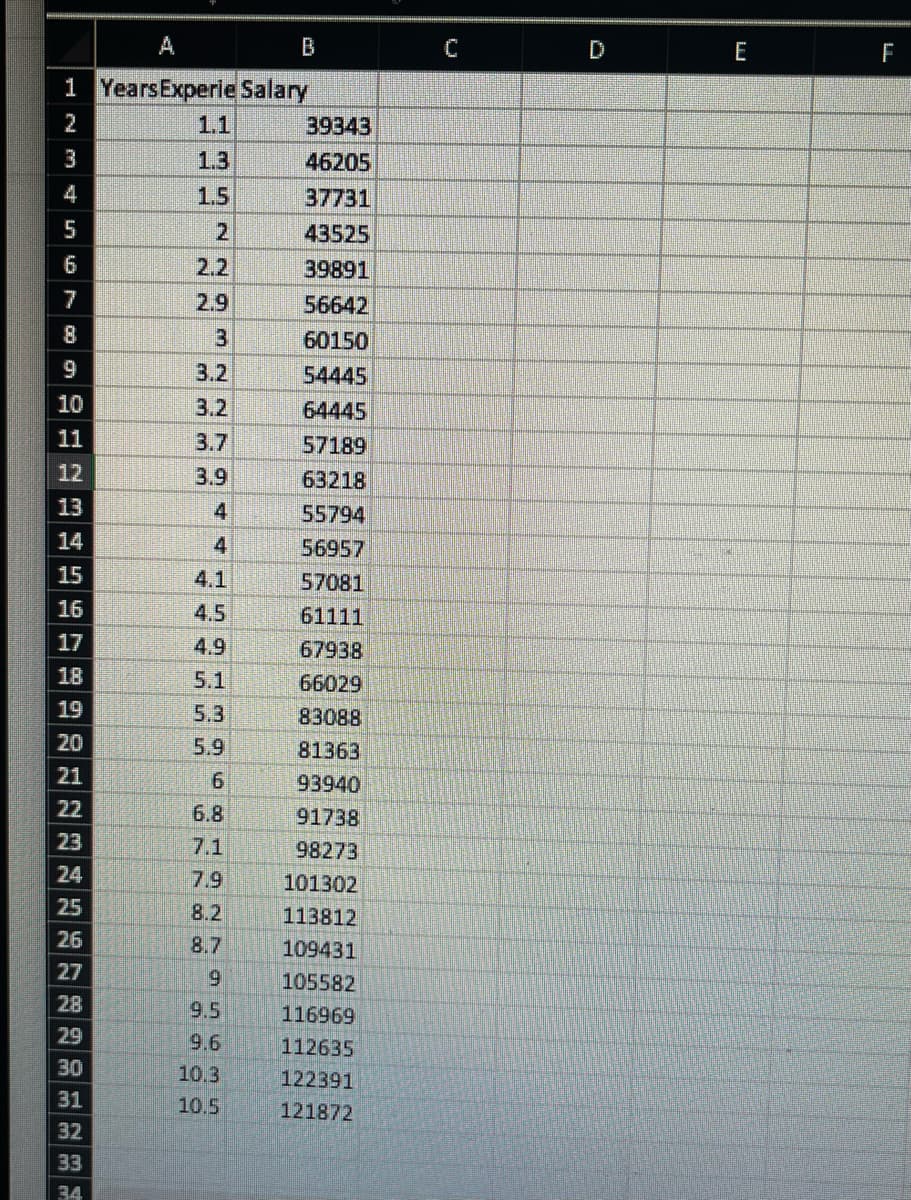

![Starter code for the Ridge regression exercise](/v2/_next/image?url=https%3A%2F%2Fcontent.bartleby.com%2Fqna-images%2Fquestion%2Fc963c9fb-4f92-45b3-8c44-4d6f750b4af1%2F1774a761-8086-415b-be0d-79dac9098709%2Fwisqbhm_processed.jpeg&w=3840&q=75)

Transcribed Image Text:

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split
import matplotlib.pyplot as plt


# Ridge Regression
class RidgeRegression():

    def __init__(self, learning_rate, iterations, l2_penality):
        self.learning_rate = learning_rate
        self.iterations = iterations
        self.l2_penality = l2_penality

    # Function for model training
    def fit(self, X, Y):
        # no_of_training_examples, no_of_features
        self.m, self.n = X.shape

        # weight initialization
        self.W = np.zeros(self.n)
        self.b = 0
        self.X = X
        self.Y = Y

        # gradient descent learning
        for i in range(self.iterations):
            self.update_weights()
        return self

    # Helper function to update weights in gradient descent
    def update_weights(self):
        # you need to figure this out.
        return self

    # Hypothetical function h(x)
    def predict(self, X):
        return X.dot(self.W) + self.b


# Driver code
def main():
    # Importing dataset
    df = pd.read_csv("salary_data.csv")
    X = df.iloc[:, :-1].values
    Y = df.iloc[:, 1].values

    # Splitting dataset into train and test set
    X_train, X_test, Y_train, Y_test = train_test_split(
        X, Y, test_size=1/3, random_state=0)

    # Model training
    model = RidgeRegression(iterations=1000,
                            learning_rate=0.01, l2_penality=1)
    model.fit(X_train, Y_train)

    # Prediction on test set
    Y_pred = model.predict(X_test)
    print("Predicted values ", np.round(Y_pred[:3], 2))
    print("Real values ", Y_test[:3])

    # Visualization on test set


if __name__ == "__main__":
    main()
```
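A minimal sketch of one way to fill in `update_weights`, assuming the cost $J(W, b) = \frac{1}{m}\left[\sum (Y - \hat{Y})^2 + \lambda \lVert W \rVert^2\right]$ with the bias left unpenalised ($\lambda$ is `l2_penality`). The rest of the class mirrors the starter code; only the gradient step is new, and it is an illustration under that assumption rather than the course's reference solution.

```python
import numpy as np


class RidgeRegression:
    """Ridge (L2-penalised) linear regression trained by batch gradient descent."""

    def __init__(self, learning_rate, iterations, l2_penality):
        self.learning_rate = learning_rate
        self.iterations = iterations
        self.l2_penality = l2_penality  # lambda; the bias b is not penalised

    def fit(self, X, Y):
        # m = number of training examples, n = number of features
        self.m, self.n = X.shape
        self.W = np.zeros(self.n)
        self.b = 0.0
        self.X = X
        self.Y = Y
        for _ in range(self.iterations):
            self.update_weights()
        return self

    def update_weights(self):
        Y_pred = self.predict(self.X)
        residual = self.Y - Y_pred
        # Gradients of J(W, b) = (1/m) * [sum((Y - Y_pred)**2) + lambda * ||W||**2]
        dW = (-2 * self.X.T.dot(residual) + 2 * self.l2_penality * self.W) / self.m
        db = -2 * np.sum(residual) / self.m
        # Take one step against the gradient
        self.W = self.W - self.learning_rate * dW
        self.b = self.b - self.learning_rate * db
        return self

    def predict(self, X):
        return X.dot(self.W) + self.b
```

For example, fitting `X = [[1], [2], [3], [4], [5]]` with `Y = 2x + 1`, `learning_rate=0.01`, `iterations=20000` and `l2_penality=1` should give a slope near 20/11 ≈ 1.818 rather than 2, which is the shrinkage the penalty is meant to produce. With the driver's unscaled salary data, 1000 iterations at `learning_rate=0.01` may stop short of the minimum, so raising `iterations` is the simplest knob to tune first.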

Transcribed Image Text:

| Years Experience | Salary |
|---:|---:|
| 1.1 | 39343 |
| 1.3 | 46205 |
| 1.5 | 37731 |
| 2 | 43525 |
| 2.2 | 39891 |
| 2.9 | 56642 |
| 3 | 60150 |
| 3.2 | 54445 |
| 3.2 | 64445 |
| 3.7 | 57189 |
| 3.9 | 63218 |
| 4 | 55794 |
| 4 | 56957 |
| 4.1 | 57081 |
| 4.5 | 61111 |
| 4.9 | 67938 |
| 5.1 | 66029 |
| 5.3 | 83088 |
| 5.9 | 81363 |
| 6 | 93940 |
| 6.8 | 91738 |
| 7.1 | 98273 |
| 7.9 | 101302 |
| 8.2 | 113812 |
| 8.7 | 109431 |
| 9 | 105582 |
| 9.5 | 116969 |
| 9.6 | 112635 |
| 10.3 | 122391 |
| 10.5 | 121872 |
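A sketch of the remaining steps (training, listing observations next to predictions, and the requested plot) on the 30 rows tabulated above. To keep it self-contained it makes a few assumptions: the names `YEARS`, `SALARY`, `fit_ridge_gd` and `split_one_third` are hypothetical; a plain function replaces the class; and a NumPy random one-third hold-out stands in for `train_test_split(..., test_size=1/3, random_state=0)`, so the held-out rows will differ from the original driver's. `iterations` is raised to 10000 as one example of the tuning the question mentions.

```python
import numpy as np
import matplotlib.pyplot as plt

# Years of experience and salary, copied from the transcribed spreadsheet
YEARS = np.array([1.1, 1.3, 1.5, 2.0, 2.2, 2.9, 3.0, 3.2, 3.2, 3.7,
                  3.9, 4.0, 4.0, 4.1, 4.5, 4.9, 5.1, 5.3, 5.9, 6.0,
                  6.8, 7.1, 7.9, 8.2, 8.7, 9.0, 9.5, 9.6, 10.3, 10.5])
SALARY = np.array([39343, 46205, 37731, 43525, 39891, 56642, 60150, 54445,
                   64445, 57189, 63218, 55794, 56957, 57081, 61111, 67938,
                   66029, 83088, 81363, 93940, 91738, 98273, 101302, 113812,
                   109431, 105582, 116969, 112635, 122391, 121872], dtype=float)


def fit_ridge_gd(x, y, learning_rate=0.01, iterations=10000, l2_penality=1.0):
    """Fit y ~ w * x + b by gradient descent on (1/m) * [SSE + lambda * w**2]."""
    m = len(x)
    w, b = 0.0, 0.0
    for _ in range(iterations):
        residual = y - (w * x + b)
        dw = (-2 * np.dot(x, residual) + 2 * l2_penality * w) / m
        db = -2 * np.sum(residual) / m  # the intercept is not penalised
        w -= learning_rate * dw
        b -= learning_rate * db
    return w, b


def split_one_third(x, y, seed=0):
    """Hold out one third of the rows at random (a NumPy stand-in for train_test_split)."""
    idx = np.random.default_rng(seed).permutation(len(x))
    n_test = len(x) // 3
    test, train = idx[:n_test], idx[n_test:]
    return x[train], x[test], y[train], y[test]


def main():
    x_train, x_test, y_train, y_test = split_one_third(YEARS, SALARY)
    w, b = fit_ridge_gd(x_train, y_train)
    y_pred = w * x_test + b

    # Observations next to the corresponding Ridge predictions
    for xi, obs, pred in sorted(zip(x_test, y_test, y_pred)):
        print(f"{xi:5.1f} years  observed {obs:9.0f}  predicted {pred:9.2f}")

    order = np.argsort(x_test)  # sort so the prediction line is drawn left to right
    plt.scatter(x_test, y_test, color="red", label="Observations")
    plt.plot(x_test[order], y_pred[order], color="green", label="Ridge predictions")
    plt.xlabel("Years of experience")
    plt.ylabel("Salary")
    plt.title("Salary vs. years of experience (test set): Ridge regression")
    plt.legend()
    plt.show()


if __name__ == "__main__":
    main()
```

If the printed predictions change noticeably when `iterations` is increased further, gradient descent has not yet converged; rescaling the years column or adjusting `learning_rate` are the usual alternatives.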
