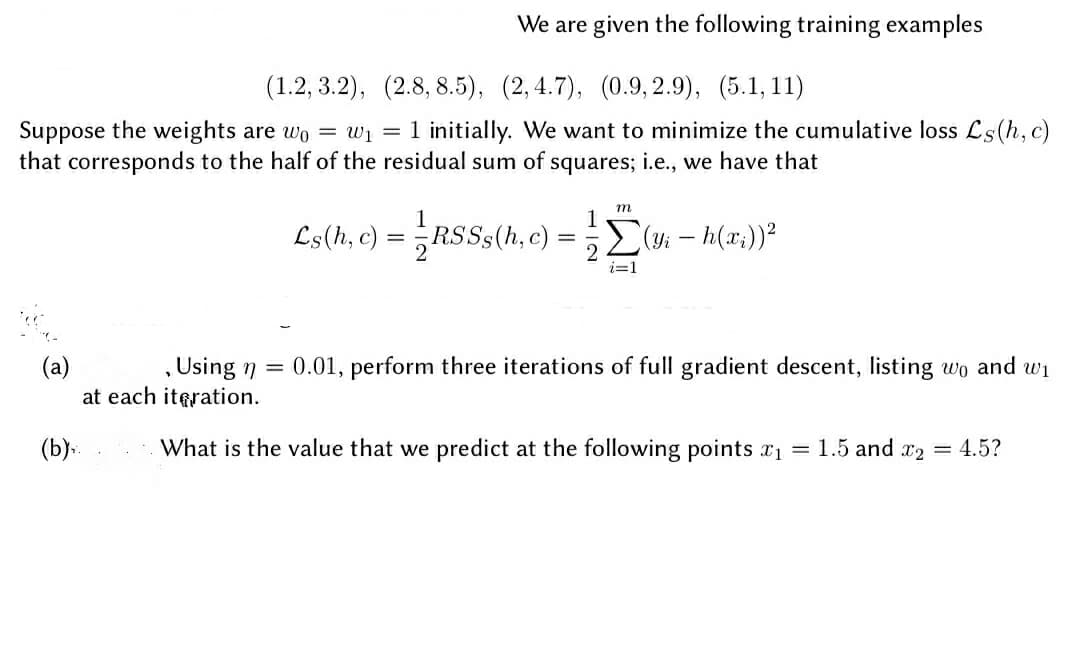

We are given the following training examples: (1.2, 3.2), (2.8, 8.5), (2, 4.7), (0.9, 2.9), (5.1, 11). Suppose the weights are $w_0 = w_1 = 1$ initially. We want to minimize the cumulative loss $L_S(h, c)$ that corresponds to half of the residual sum of squares; i.e., we have that

$$L_S(h, c) = \frac{1}{2} RSS_S(h, c) = \frac{1}{2} \sum_{i=1}^{m} \bigl(y_i - h(x_i)\bigr)^2$$

(a) Using $\eta = 0.01$, perform three iterations of full gradient descent, listing $w_0$ and $w_1$ at each iteration.

(b) What is the value that we predict at the following points: $x_1 = 1.5$ and $x_2 = 4.5$?
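One way to check parts (a) and (b) numerically is the short sketch below. It assumes the hypothesis is the single-input linear model $h(x) = w_0 + w_1 x$ and that "full gradient descent" means every update uses all five examples; the function names `predict`, `gradient_step`, and `run` are illustrative and not taken from the question.

```python
# Training examples (x_i, y_i) copied from the question.
DATA = [(1.2, 3.2), (2.8, 8.5), (2.0, 4.7), (0.9, 2.9), (5.1, 11.0)]


def predict(w0, w1, x):
    """Assumed linear hypothesis h(x) = w0 + w1 * x."""
    return w0 + w1 * x


def gradient_step(w0, w1, data, eta):
    """One full-batch step on L = 1/2 * sum((y - h(x))^2)."""
    residuals = [y - predict(w0, w1, x) for x, y in data]
    # dL/dw0 = -sum(residual), dL/dw1 = -sum(residual * x)
    grad_w0 = -sum(residuals)
    grad_w1 = -sum(r * x for r, (x, _) in zip(residuals, data))
    return w0 - eta * grad_w0, w1 - eta * grad_w1


def run(data=DATA, w0=1.0, w1=1.0, eta=0.01, iterations=3):
    """Return the list of (w0, w1) pairs after each iteration."""
    history = []
    for _ in range(iterations):
        w0, w1 = gradient_step(w0, w1, data, eta)
        history.append((w0, w1))
    return history


if __name__ == "__main__":
    history = run()
    for k, (w0, w1) in enumerate(history, start=1):
        print(f"iteration {k}: w0 = {w0:.6f}, w1 = {w1:.6f}")
    w0, w1 = history[-1]
    for x in (1.5, 4.5):
        print(f"h({x}) = {predict(w0, w1, x):.6f}")
```

Under these assumptions the weights should come out at roughly (1.1330, 1.4365), (1.2070, 1.6820), and (1.2478, 1.8202) after iterations one to three, with predictions of about 3.978 at $x = 1.5$ and 9.439 at $x = 4.5$. Both weights are updated simultaneously from the same residuals, which is why `gradient_step` computes both gradients before changing either weight.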



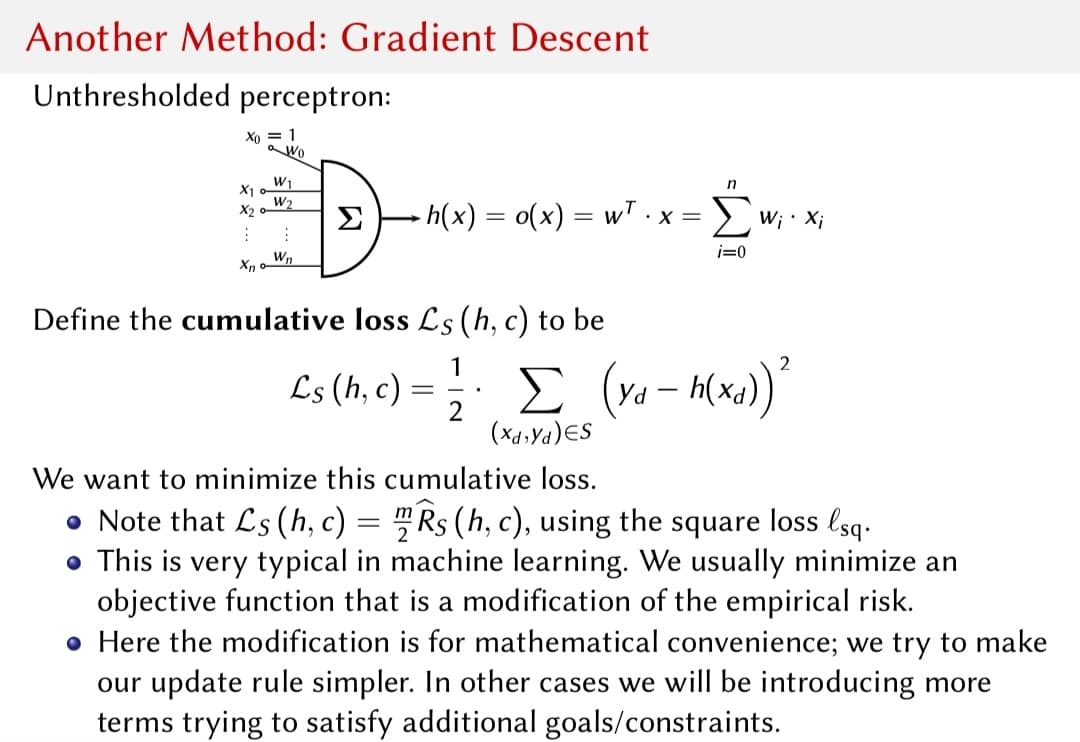

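Transcribed Image Text: Another Method: Gradient Descent

Unthresholded perceptron: the inputs $x_0 = 1, x_1, x_2, \ldots, x_n$, weighted by $w_0, w_1, w_2, \ldots, w_n$, feed a single summation unit $\Sigma$ whose output is

$$h(x) = o(x) = w^T \cdot x = \sum_{i=0}^{n} w_i \cdot x_i$$

Define the cumulative loss $L_S(h, c)$ to be

$$L_S(h, c) = \frac{1}{2} \sum_{(x_d, y_d) \in S} \bigl(y_d - h(x_d)\bigr)^2$$

We want to minimize this cumulative loss.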

• Note that $L_S(h, c) = \frac{m}{2} \hat{R}_S(h, c)$, using the square loss $\ell_{sq}$.

• This is very typical in machine learning. We usually minimize an objective function that is a modification of the empirical risk.

• Here the modification is for mathematical convenience; we try to make our update rule simpler. In other cases we will be introducing more terms trying to satisfy additional goals/constraints.
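To see why the factor of one half makes the update rule simpler, a short derivation sketch follows. The symbol $\eta$ for the learning rate and the notation $x_{d,i}$ for the $i$-th component of example $x_d$ (with $x_{d,0} = 1$) are assumptions introduced here; the update equations are the standard batch rule and are not transcribed from the slide.

$$
\begin{aligned}
L_S(h, c) &= \frac{1}{2} \sum_{(x_d, y_d) \in S} \bigl(y_d - h(x_d)\bigr)^2, \qquad h(x_d) = \sum_{i=0}^{n} w_i \cdot x_{d,i} \\
\frac{\partial L_S}{\partial w_i} &= \frac{1}{2} \sum_{(x_d, y_d) \in S} 2 \bigl(y_d - h(x_d)\bigr) \cdot (-x_{d,i}) = -\sum_{(x_d, y_d) \in S} \bigl(y_d - h(x_d)\bigr) \, x_{d,i} \\
w_i &\leftarrow w_i - \eta \, \frac{\partial L_S}{\partial w_i} = w_i + \eta \sum_{(x_d, y_d) \in S} \bigl(y_d - h(x_d)\bigr) \, x_{d,i}
\end{aligned}
$$

The 2 produced by differentiating the square cancels the leading $\frac{1}{2}$, so the update carries no extra constant. Dropping the $\frac{1}{m}$ of the empirical risk only rescales the gradient, which amounts to a different effective learning rate.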
