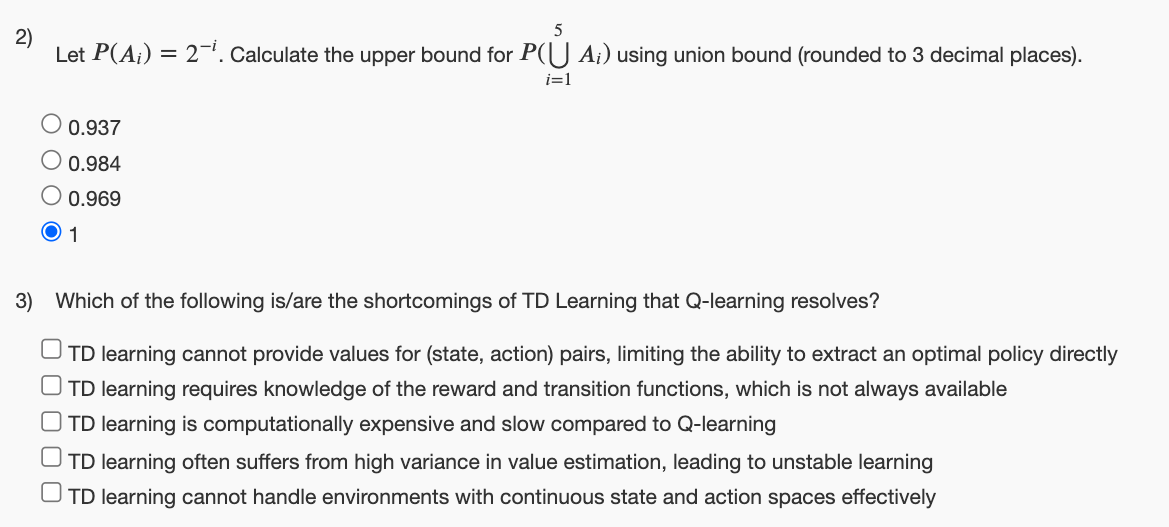

2) 5 Let P(A) = 2. Calculate the upper bound for P(U A;) using union bound (rounded to 3 decimal places). O 0.937 0.984 ○ 0.969 1 i=1 3) Which of the following is/are the shortcomings of TD Learning that Q-learning resolves? UTD learning cannot provide values for (state, action) pairs, limiting the ability to extract an optimal policy directly ☐ TD learning requires knowledge of the reward and transition functions, which is not always available ☐ TD learning is computationally expensive and slow compared to Q-learning TD learning often suffers from high variance in value estimation, leading to unstable learning TD learning cannot handle environments with continuous state and action spaces effectively

2) 5 Let P(A) = 2. Calculate the upper bound for P(U A;) using union bound (rounded to 3 decimal places). O 0.937 0.984 ○ 0.969 1 i=1 3) Which of the following is/are the shortcomings of TD Learning that Q-learning resolves? UTD learning cannot provide values for (state, action) pairs, limiting the ability to extract an optimal policy directly ☐ TD learning requires knowledge of the reward and transition functions, which is not always available ☐ TD learning is computationally expensive and slow compared to Q-learning TD learning often suffers from high variance in value estimation, leading to unstable learning TD learning cannot handle environments with continuous state and action spaces effectively

Operations Research : Applications and Algorithms

4th Edition

ISBN:9780534380588

Author:Wayne L. Winston

Publisher:Wayne L. Winston

Chapter19: Probabilistic Dynamic Programming

Section: Chapter Questions

Problem 4RP

Question

Alert dont submit

refref Image and solve all 2 question.

explain all option right answer and wrong answer.

Transcribed Image Text:2)

5

Let P(A) = 2. Calculate the upper bound for P(U A;) using union bound (rounded to 3 decimal places).

O 0.937

0.984

○ 0.969

1

i=1

3) Which of the following is/are the shortcomings of TD Learning that Q-learning resolves?

UTD learning cannot provide values for (state, action) pairs, limiting the ability to extract an optimal policy directly

☐ TD learning requires knowledge of the reward and transition functions, which is not always available

☐ TD learning is computationally expensive and slow compared to Q-learning

TD learning often suffers from high variance in value estimation, leading to unstable learning

TD learning cannot handle environments with continuous state and action spaces effectively

AI-Generated Solution

Unlock instant AI solutions

Tap the button

to generate a solution

Recommended textbooks for you

Operations Research : Applications and Algorithms

Computer Science

ISBN:

9780534380588

Author:

Wayne L. Winston

Publisher:

Brooks Cole

Operations Research : Applications and Algorithms

Computer Science

ISBN:

9780534380588

Author:

Wayne L. Winston

Publisher:

Brooks Cole