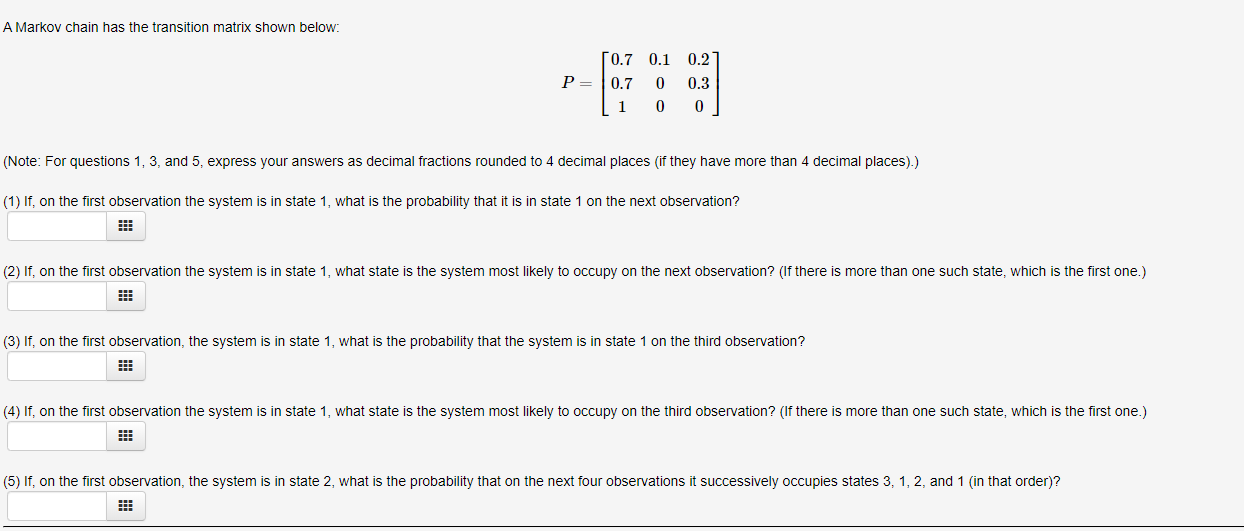

A Markov chain has the transition matrix P shown in the image above. (Only the entries 0.7, 0.1, 0.2, 0.7, and 0.3 survive in the text transcription.)

Note: For questions 1, 3, and 5, express your answers as decimal fractions rounded to 4 decimal places (if they have more than 4 decimal places).

(1) If, on the first observation, the system is in state 1, what is the probability that it is in state 1 on the next observation?

(2) If, on the first observation, the system is in state 1, what state is the system most likely to occupy on the next observation? (If there is more than one such state, give the first one.)

(3) If, on the first observation, the system is in state 1, what is the probability that the system is in state 1 on the third observation?

(4) If, on the first observation, the system is in state 1, what state is the system most likely to occupy on the third observation? (If there is more than one such state, give the first one.)

(5) If, on the first observation, the system is in state 2, what is the probability that on the next four observations it successively occupies states 3, 1, 2, and 1 (in that order)?
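The five questions reduce to three operations on a transition matrix: reading a row (next observation), taking the matching row of the squared matrix (third observation, which is two transitions after the first), and multiplying entries along a path. The Python sketch below illustrates these under stated assumptions. The matrix `P` in it is hypothetical, chosen only so that each row sums to 1, because the matrix in the question is only partly legible; the helper names (`n_step`, `most_likely_state`, `path_probability`) are likewise illustrative. The values it produces are therefore not the answers to the question.

```python
# Hypothetical 3-state transition matrix, NOT the one from the question.
# Row i holds the probabilities of moving from state i+1 to states 1, 2, 3.
P = [
    [0.7, 0.1, 0.2],
    [0.3, 0.4, 0.3],
    [0.5, 0.5, 0.0],
]


def mat_mul(a, b):
    """Multiply two square matrices given as lists of rows."""
    n = len(a)
    return [
        [sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
        for i in range(n)
    ]


def n_step(p, steps):
    """Return the matrix of `steps`-step transition probabilities (steps >= 1)."""
    result = p
    for _ in range(steps - 1):
        result = mat_mul(result, p)
    return result


def most_likely_state(row):
    """1-based index of the largest probability; ties go to the first state."""
    return row.index(max(row)) + 1


def path_probability(p, start, path):
    """Probability of visiting the states in `path`, in order, from `start`."""
    prob = 1.0
    current = start
    for nxt in path:
        prob *= p[current - 1][nxt - 1]
        current = nxt
    return prob


one_step = P[0]              # distribution on the next observation from state 1
two_step = n_step(P, 2)[0]   # distribution on the third observation from state 1

q1 = round(one_step[0], 4)                            # question (1)
q2 = most_likely_state(one_step)                      # question (2)
q3 = round(two_step[0], 4)                            # question (3)
q4 = most_likely_state(two_step)                      # question (4)
q5 = round(path_probability(P, 2, [3, 1, 2, 1]), 4)   # question (5)

print(q1, q2, q3, q4, q5)
```

Substituting the actual matrix from the image for `P` gives the requested values. The tie-breaking rule in questions (2) and (4) is handled by `list.index`, which returns the first position of the maximum.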

Elementary Linear Algebra (MindTap Course List), 8th Edition

ISBN: 9781305658004

Author: Ron Larson

Publisher: Cengage Learning

Chapter 2: Matrices

Section 2.5: Markov Chain

Problem 49E: Consider the Markov chain whose matrix of transition probabilities P is given in Example 7b. Show...
