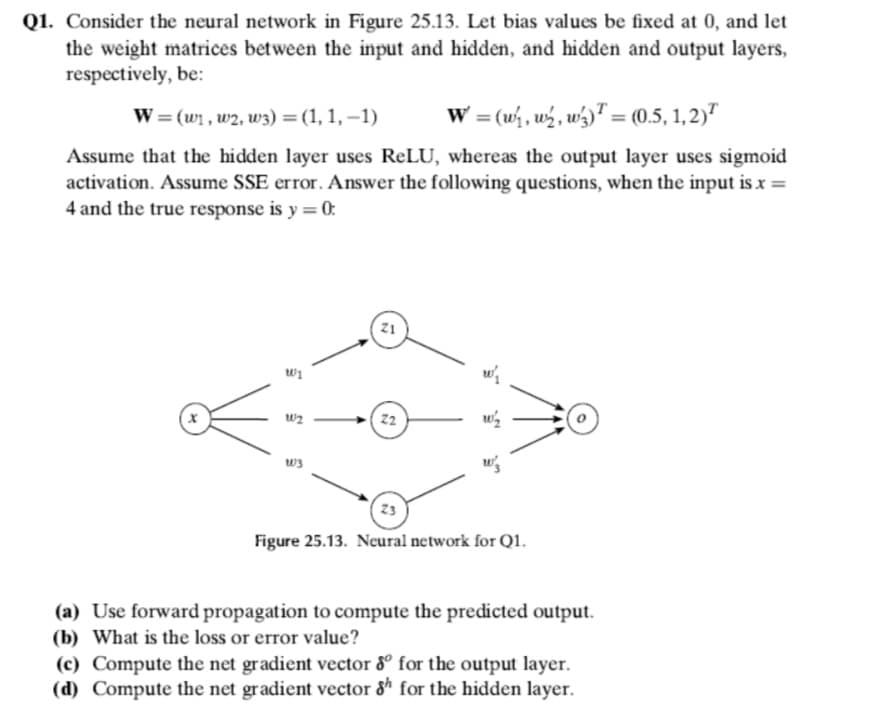

Q1. Consider the neural network in Figure 25.13. Let the bias values be fixed at 0, and let the weight matrices between the input and hidden layers, and between the hidden and output layers, respectively, be:

$$\mathbf{W} = (w_1, w_2, w_3) = (1, 1, -1)$$

$$\mathbf{W}' = (w'_1, w'_2, w'_3)^T = (0.5, 1, 2)^T$$

Assume that the hidden layer uses ReLU, whereas the output layer uses sigmoid activation. Assume SSE error. Answer the following questions, when the input is $x = 4$ and the true response is $y = 0$:

Figure 25.13. Neural network for Q1.

(a) Use forward propagation to compute the predicted output.

(b) What is the loss or error value?

(c) Compute the net gradient vector $\boldsymbol{\delta}^o$ for the output layer.

(d) Compute the net gradient vector $\boldsymbol{\delta}^h$ for the hidden layer.
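The four parts of Q1 can be checked numerically with a short sketch. It rests on assumptions the question does not spell out: the single input feeds three hidden neurons through $w_1, w_2, w_3$, the hidden neurons feed one output neuron through the second weight vector, and the SSE for a single output is taken as $\frac{1}{2}(y - o)^2$ (drop the factor $\frac{1}{2}$ if your course defines it without one, in which case the gradients double). The function name `forward_backward` is purely illustrative.

```python
import math

def forward_backward(x, y, w_hidden, w_out):
    """Forward pass and net gradients for a 1-3-1 network with zero biases.

    Assumed conventions: ReLU hidden layer, sigmoid output,
    loss = 0.5 * (y - o)^2.
    """
    # (a) Forward propagation
    net_h = [w * x for w in w_hidden]                 # hidden net inputs
    z = [max(0.0, n) for n in net_h]                  # ReLU activations
    net_o = sum(wo * zi for wo, zi in zip(w_out, z))  # output net input
    o = 1.0 / (1.0 + math.exp(-net_o))                # sigmoid output

    # (b) Loss under the assumed 1/2 squared-error convention
    loss = 0.5 * (y - o) ** 2

    # (c) Output net gradient: dE/dnet_o = (o - y) * o * (1 - o)
    delta_o = (o - y) * o * (1.0 - o)

    # (d) Hidden net gradient: ReLU'(net_h) * w_out * delta_o, elementwise
    delta_h = [(1.0 if n > 0 else 0.0) * wo * delta_o
               for n, wo in zip(net_h, w_out)]

    return z, o, loss, delta_o, delta_h

z, o, loss, delta_o, delta_h = forward_backward(
    x=4.0, y=0.0, w_hidden=[1.0, 1.0, -1.0], w_out=[0.5, 1.0, 2.0]
)
print("hidden activations:", z)
print("predicted output:", o)
print("loss:", loss)
print("delta_o:", delta_o)
print("delta_h:", delta_h)
```

Under these assumptions the hidden activations are $(4, 4, 0)$, the output net input is 6, and the predicted output is $\sigma(6) \approx 0.9975$. The loss is about 0.4975, $\delta^o \approx 0.00246$, and $\boldsymbol{\delta}^h \approx (0.00123, 0.00246, 0)$. The third hidden component is zero because ReLU is inactive for a negative net input.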


Operations Research: Applications and Algorithms

4th Edition

ISBN: 9780534380588

Author: Wayne L. Winston

Publisher: Brooks Cole

Chapter 20: Queuing Theory

Section 20.10: Exponential Queues In Series And Open Queuing Networks

Problem 6P


