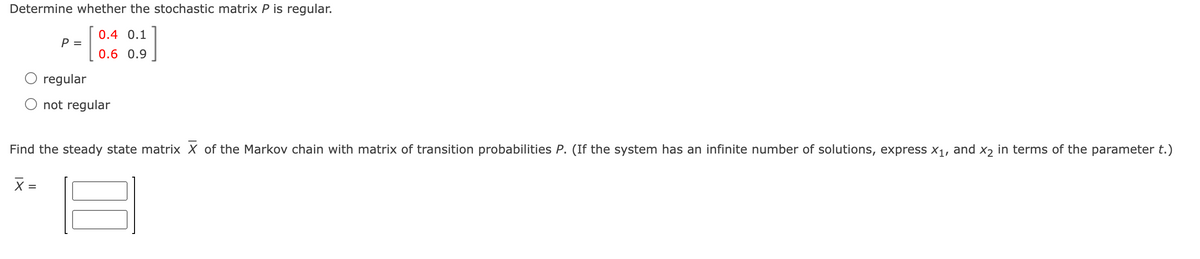

Determine whether the stochastic matrix P is regular.

$$P = \begin{bmatrix} 0.4 & 0.1 \\ 0.6 & 0.9 \end{bmatrix}$$

Choose one: regular / not regular

Find the steady state matrix X of the Markov chain with matrix of transition probabilities P. (If the system has an infinite number of solutions, express x1 and x2 in terms of the parameter t.)

X =
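A hand derivation, assuming the column-stochastic convention used in Larson's text (each column of P sums to 1, and the steady state satisfies PX = X with x_1 + x_2 = 1). Every entry of P is positive, so the first power of P already has only positive entries and P is regular. A regular chain has a unique steady state, so no parameter t is needed:

$$
\begin{aligned}
0.4x_1 + 0.1x_2 = x_1 &\;\Longrightarrow\; -0.6x_1 + 0.1x_2 = 0 \;\Longrightarrow\; x_2 = 6x_1\\
0.6x_1 + 0.9x_2 = x_2 &\;\Longrightarrow\; 0.6x_1 - 0.1x_2 = 0 \quad(\text{the same equation})\\
x_1 + x_2 = 1 &\;\Longrightarrow\; x_1 + 6x_1 = 1 \;\Longrightarrow\; x_1 = \tfrac{1}{7},\quad x_2 = \tfrac{6}{7}\\
X &= \begin{bmatrix} 1/7 \\ 6/7 \end{bmatrix}
\end{aligned}
$$

Check: 0.4(1/7) + 0.1(6/7) = 1/7 and 0.6(1/7) + 0.9(6/7) = 6/7, so PX = X.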


Elementary Linear Algebra (MindTap Course List), 8th Edition. ISBN: 9781305658004. Author: Ron Larson. Publisher: Cengage Learning.

Chapter 2: Matrices, Section 2.5: Markov Chain

Problem 47E: Explain how you can determine the steady state matrix X of an absorbing Markov chain by inspection.


Question

A. Determine whether the stochastic matrix P is regular.
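A stochastic matrix is regular when some power of it has only strictly positive entries. The sketch below tests powers P, P², … up to (n − 1)² + 1, which is Wielandt's bound on how many powers a regular (primitive) n × n nonnegative matrix needs; the function name `is_regular` is a hypothetical helper, and NumPy is assumed to be available.

```python
import numpy as np

def is_regular(P: np.ndarray) -> bool:
    """Return True if some power P^k (k >= 1) has only strictly positive entries.

    Only k = 1, ..., (n-1)^2 + 1 need to be checked: by Wielandt's bound, a
    regular (primitive) n x n nonnegative matrix is already positive by then.
    """
    n = P.shape[0]
    power = P.copy()
    for _ in range((n - 1) ** 2 + 1):
        if np.all(power > 0):
            return True
        power = power @ P  # next power of P
    return False

P = np.array([[0.4, 0.1],
              [0.6, 0.9]])
print(is_regular(P))  # True: every entry of P itself is positive
```

For contrast, the permutation matrix [[0, 1], [1, 0]] is not regular, because its powers alternate between itself and the identity and always contain zeros.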

B. Find the steady state matrix X of the Markov chain with matrix of transition probabilities P. (If the system has an infinite number of solutions, express x1 and x2 in terms of the parameter t.)


X = ?
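A numerical check of part B, assuming the column-stochastic convention (PX = X). It stacks the homogeneous system (P − I)X = 0 with the normalization row x1 + x2 = 1 and solves the resulting consistent system with `np.linalg.lstsq`. The helper name `steady_state` is hypothetical, and the sketch assumes P is regular so the solution is unique; for a non-regular P with infinitely many solutions, least squares would silently return just one of them.

```python
import numpy as np

def steady_state(P: np.ndarray) -> np.ndarray:
    """Solve P X = X with the entries of X summing to 1 (column-stochastic P).

    (P - I) X = 0 is stacked with the row [1, ..., 1] X = 1; for a regular P
    the stacked system has exactly one solution, found here by least squares.
    """
    n = P.shape[0]
    A = np.vstack([P - np.eye(n), np.ones((1, n))])
    b = np.zeros(n + 1)
    b[-1] = 1.0  # right-hand side of the normalization row
    X, *_ = np.linalg.lstsq(A, b, rcond=None)
    return X

P = np.array([[0.4, 0.1],
              [0.6, 0.9]])
print(steady_state(P))  # approximately [1/7, 6/7]
```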



