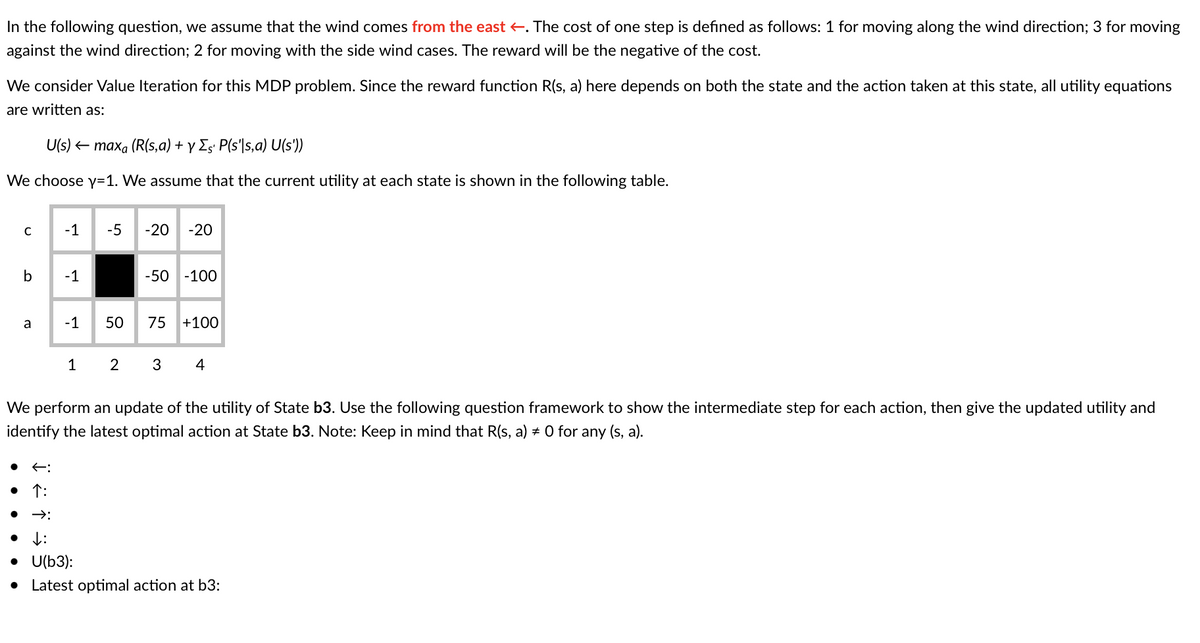

In the following question, we assume that the wind comes from the east . The cost of one step is defined as follows: 1 for moving along the wind direction; 3 for moving against the wind direction; 2 for moving with the side wind cases. The reward will be the negative of the cost. We consider Value Iteration for this MDP problem. Since the reward function R(s, a) here depends on both the state and the action taken at this state, all utility equations are written as: U(s) – maxa (R(s,a) + y Es' P(s"ls,a) U(s')) We choose y=1. We assume that the current utility at each state is shown in the following table. -1 -5 -20 -20 b -1 -50 -100 a -1 50 75 +100 1 2 3 4 We perform an update of the utility of State b3. Use the following question framework to show the intermediate step for each action, then give the updated utility and identify the latest optimal action at State b3. Note: Keep in mind that R(s, a) = 0 for any (s, a). • T: : • U(b3): • Latest optimal action at b3:

In the following question, we assume that the wind comes from the east . The cost of one step is defined as follows: 1 for moving along the wind direction; 3 for moving against the wind direction; 2 for moving with the side wind cases. The reward will be the negative of the cost. We consider Value Iteration for this MDP problem. Since the reward function R(s, a) here depends on both the state and the action taken at this state, all utility equations are written as: U(s) – maxa (R(s,a) + y Es' P(s"ls,a) U(s')) We choose y=1. We assume that the current utility at each state is shown in the following table. -1 -5 -20 -20 b -1 -50 -100 a -1 50 75 +100 1 2 3 4 We perform an update of the utility of State b3. Use the following question framework to show the intermediate step for each action, then give the updated utility and identify the latest optimal action at State b3. Note: Keep in mind that R(s, a) = 0 for any (s, a). • T: : • U(b3): • Latest optimal action at b3:

Operations Research : Applications and Algorithms

4th Edition

ISBN:9780534380588

Author:Wayne L. Winston

Publisher:Wayne L. Winston

Chapter20: Queuing Theory

Section20.4: The M/m/1/gd/∞/∞ Queuing System And The Queuing Formula L = Λw

Problem 10P

Related questions

Question

6

Transcribed Image Text:In the following question, we assume that the wind comes from the eastE. The cost of one step is defined as follows: 1 for moving along the wind direction; 3 for moving

against the wind direction; 2 for moving with the side wind cases. The reward will be the negative of the cost.

We consider Value Iteration for this MDP problem. Since the reward function R(s, a) here depends on both the state and the action taken at this state, all utility equations

are written as:

U(s) + maxa (R(s,a) + y Es' P(s'ls,a) U(s'))

We choose y=1. We assume that the current utility at each state is shown in the following table.

-1

-5

-20

-20

b

-1

-50 -100

a

-1

50

75

+100

1

2

4

We perform an update of the utility of State b3. Use the following question framework to show the intermediate step for each action, then give the updated utility and

identify the latest optimal action at State b3. Note: Keep in mind that R(s, a) + 0 for any (s, a).

• 1:

• >:

• U(b3):

Latest optimal action at b3:

Expert Solution

This question has been solved!

Explore an expertly crafted, step-by-step solution for a thorough understanding of key concepts.

Step by step

Solved in 3 steps with 1 images

Knowledge Booster

Learn more about

Need a deep-dive on the concept behind this application? Look no further. Learn more about this topic, computer-science and related others by exploring similar questions and additional content below.Recommended textbooks for you

Operations Research : Applications and Algorithms

Computer Science

ISBN:

9780534380588

Author:

Wayne L. Winston

Publisher:

Brooks Cole

Operations Research : Applications and Algorithms

Computer Science

ISBN:

9780534380588

Author:

Wayne L. Winston

Publisher:

Brooks Cole