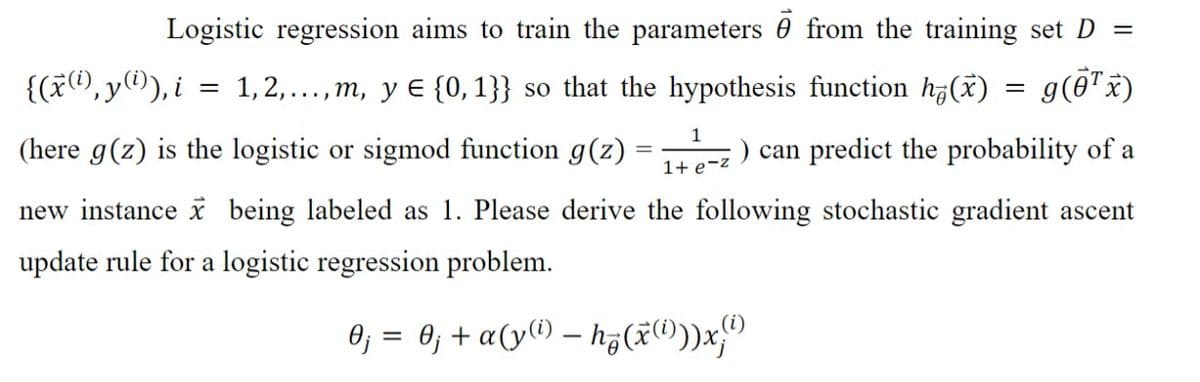

Logistic regression aims to train the parameters $\theta$ from the training set $D = \{(x^{(i)}, y^{(i)}),\ i = 1, 2, \ldots, m,\ y \in \{0, 1\}\}$ so that the hypothesis function $h_\theta(x) = g(\theta^T x)$ (here $g(z)$ is the logistic or sigmoid function $g(z) = \frac{1}{1 + e^{-z}}$) can predict the probability of a new instance $x$ being labeled as 1. Please derive the following stochastic gradient ascent update rule for a logistic regression problem:

$$\theta_j = \theta_j + \alpha \left( y^{(i)} - h_\theta(x^{(i)}) \right) x_j^{(i)}$$
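One way the requested derivation could go is sketched here. The question does not state the objective being maximized, so this sketch assumes the usual Bernoulli model, $P(y = 1 \mid x; \theta) = h_\theta(x)$, and maximizes the resulting log-likelihood $\ell(\theta)$. The per-example term $\ell_i$ and the shorthand $h = h_\theta(x^{(i)})$ are notation introduced only for this sketch.

```latex
\begin{align*}
% Assumed objective: log-likelihood of the Bernoulli model
\ell(\theta) &= \sum_{i=1}^{m} \ell_i(\theta), \qquad
\ell_i(\theta) = y^{(i)} \log h + \bigl(1 - y^{(i)}\bigr) \log (1 - h),
\quad h = g\bigl(\theta^T x^{(i)}\bigr) \\[4pt]
% Derivative of the sigmoid
g'(z) &= \frac{e^{-z}}{(1 + e^{-z})^2} = g(z)\bigl(1 - g(z)\bigr) \\[4pt]
% Chain rule on a single example
\frac{\partial \ell_i}{\partial \theta_j}
  &= \left( \frac{y^{(i)}}{h} - \frac{1 - y^{(i)}}{1 - h} \right)
     \frac{\partial h}{\partial \theta_j}
   = \left( \frac{y^{(i)}}{h} - \frac{1 - y^{(i)}}{1 - h} \right)
     h (1 - h)\, x_j^{(i)} \\[4pt]
  &= \bigl( y^{(i)} (1 - h) - (1 - y^{(i)})\, h \bigr)\, x_j^{(i)}
   = \bigl( y^{(i)} - h_\theta(x^{(i)}) \bigr)\, x_j^{(i)} \\[4pt]
% Ascent step on one example with learning rate alpha
\theta_j &:= \theta_j + \alpha\, \frac{\partial \ell_i}{\partial \theta_j}
   = \theta_j + \alpha \bigl( y^{(i)} - h_\theta(x^{(i)}) \bigr)\, x_j^{(i)}
\end{align*}
```

Because the step moves along the gradient of a single example's log-likelihood rather than the full sum, it is a stochastic gradient ascent step; the sign is positive because the likelihood is being maximized, not a loss minimized.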

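The update rule in the question can also be tried numerically. The following is a minimal sketch in plain Python, not a reference implementation: the function names `sigmoid`, `sga_step`, and `fit`, the learning rate, the epoch count, and the toy data are all assumptions made for illustration.

```python
import math
import random


def sigmoid(z):
    """Logistic function g(z) = 1 / (1 + e^{-z}), written to avoid overflow."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def sga_step(theta, x, y, alpha):
    """One stochastic gradient ascent step on a single example (x, y).

    Applies theta_j <- theta_j + alpha * (y - h_theta(x)) * x_j for every j.
    """
    h = sigmoid(sum(t * xj for t, xj in zip(theta, x)))
    return [t + alpha * (y - h) * xj for t, xj in zip(theta, x)]


def fit(data, alpha=0.1, epochs=200, seed=0):
    """Run SGA over shuffled passes of `data`, a list of (x, y) pairs.

    alpha, epochs, and seed are illustrative defaults, not tuned values.
    """
    rng = random.Random(seed)
    theta = [0.0] * len(data[0][0])
    for _ in range(epochs):
        order = list(range(len(data)))
        rng.shuffle(order)
        for i in order:
            x, y = data[i]
            theta = sga_step(theta, x, y, alpha)
    return theta


if __name__ == "__main__":
    # Hypothetical toy set: first feature is a constant bias term.
    toy = [([1.0, -2.0], 0), ([1.0, -1.0], 0), ([1.0, 1.0], 1), ([1.0, 2.0], 1)]
    theta = fit(toy)
    print(theta, sigmoid(theta[0] + 2.0 * theta[1]))
```

Starting from $\theta = 0$, the hypothesis is 0.5 for every input, so the first step on a positive example moves each $\theta_j$ by $0.5\,\alpha\,x_j$, which is a quick sanity check on the sign of the rule.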


