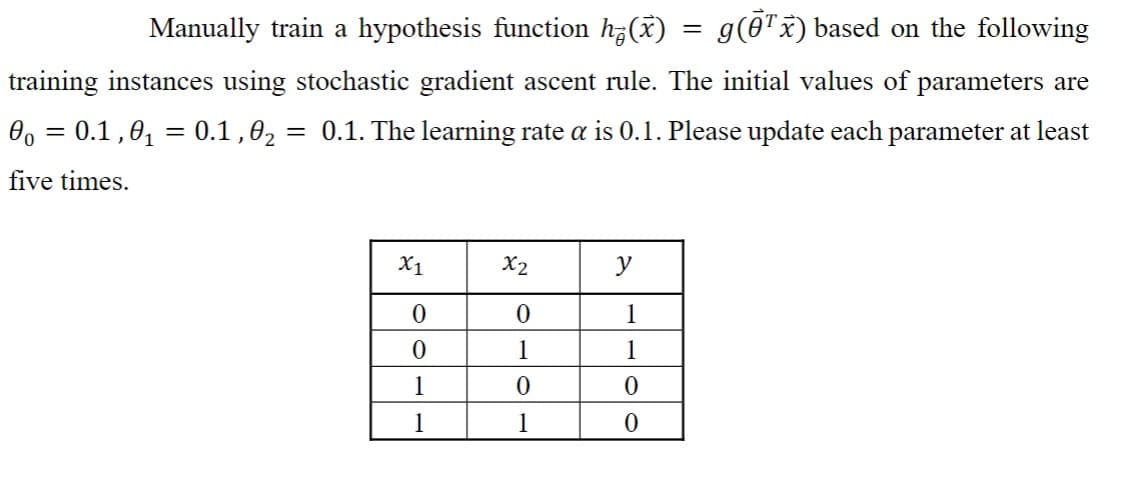

Manually train a hypothesis function h(x) = g(θᵀx) on the following training instances using the stochastic gradient ascent rule. The initial parameter values are θ₀ = 0.1, θ₁ = 0.1, θ₂ = 0.1. The learning rate α is 0.1. Please update each parameter at least five times.

| Instance | 1 | 2 | 3 | 4 |
|----------|---|---|---|---|
| x₁       | 0 | 0 | 1 | 1 |
| x₂       | 0 | 1 | 0 | 1 |
| y        | 1 | 1 | 0 | 0 |
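As a starting point for the hand calculation, the first update can be written out as follows. This is a sketch under stated assumptions, not a confirmed solution: the problem does not define g, so it is taken here to be the logistic sigmoid; x₀ = 1 is assumed as the intercept input paired with θ₀; and the instances are assumed to be visited in the order listed.

$$
\begin{aligned}
g(z) &= \frac{1}{1 + e^{-z}}, \qquad
\theta_j := \theta_j + \alpha \,\bigl(y - h(x)\bigr)\, x_j, \quad j = 0, 1, 2 \\[4pt]
\text{Instance 1: } & x = (x_0, x_1, x_2) = (1, 0, 0), \quad y = 1 \\
\theta^{T} x &= 0.1 \cdot 1 + 0.1 \cdot 0 + 0.1 \cdot 0 = 0.1 \\
h(x) &= g(0.1) \approx 0.5250 \\
\theta_0 &:= 0.1 + 0.1 \,(1 - 0.5250) \cdot 1 \approx 0.1475 \\
\theta_1 &:= 0.1 + 0.1 \,(1 - 0.5250) \cdot 0 = 0.1 \\
\theta_2 &:= 0.1 + 0.1 \,(1 - 0.5250) \cdot 0 = 0.1
\end{aligned}
$$

Each later update repeats the same three lines with the next instance and the most recent parameter values. Whenever x_j = 0, the corresponding θ_j is left unchanged by that step.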


Operations Research: Applications and Algorithms

4th Edition

ISBN: 9780534380588

Author: Wayne L. Winston

Publisher: Brooks Cole

Chapter 21: Simulation

Section 21.5: Simulations With Continuous Random Variables

Problem 6P


Question

Please write this out by hand and not by code. I would be so grateful.
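Although the request is for a hand calculation, a short script can be handy for cross-checking the arithmetic afterwards. The sketch below is only that: it assumes g is the logistic sigmoid, prepends x₀ = 1 to each instance, visits the instances in the listed order (wrapping around after the fourth), and reads "at least five times" as five update steps. The names `sigmoid`, `sga_updates`, and `DATA` are hypothetical and chosen for illustration.

```python
import math


def sigmoid(z):
    """Logistic function, assumed here to be the g in h(x) = g(theta^T x)."""
    return 1.0 / (1.0 + math.exp(-z))


def sga_updates(data, theta, alpha, n_updates):
    """Apply n_updates stochastic gradient ascent steps, one instance per step.

    Each step uses theta_j := theta_j + alpha * (y - h(x)) * x_j.
    Returns the parameter vector recorded after every step.
    """
    theta = list(theta)
    history = []
    for k in range(n_updates):
        x, y = data[k % len(data)]  # cycle through the instances in order
        h = sigmoid(sum(t * xi for t, xi in zip(theta, x)))
        theta = [t + alpha * (y - h) * xi for t, xi in zip(theta, x)]
        history.append(tuple(theta))
    return history


# Training instances as ((x0, x1, x2), y), with x0 = 1 added as the intercept input.
DATA = [
    ((1, 0, 0), 1),
    ((1, 0, 1), 1),
    ((1, 1, 0), 0),
    ((1, 1, 1), 0),
]

history = sga_updates(DATA, theta=(0.1, 0.1, 0.1), alpha=0.1, n_updates=5)
for step, (t0, t1, t2) in enumerate(history, start=1):
    print(f"update {step}: theta0={t0:.4f}, theta1={t1:.4f}, theta2={t2:.4f}")
```

Comparing each printed row with the corresponding hand-computed row should expose any slip in the sigmoid evaluation or in the sign of the update.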


