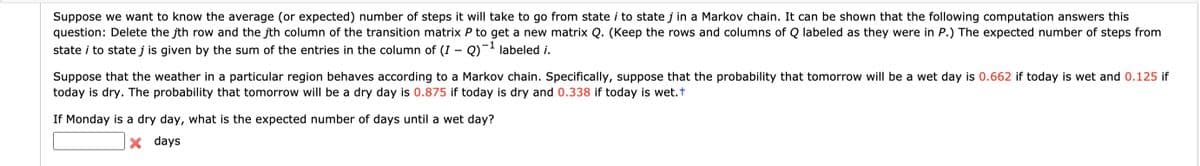

Suppose we want to know the average (or expected) number of steps it will take to go from state $i$ to state $j$ in a Markov chain. It can be shown that the following computation answers this question: Delete the $j$th row and the $j$th column of the transition matrix $P$ to get a new matrix $Q$. (Keep the rows and columns of $Q$ labeled as they were in $P$.) The expected number of steps from state $i$ to state $j$ is given by the sum of the entries in the column of $(I - Q)^{-1}$ labeled $i$.

Suppose that the weather in a particular region behaves according to a Markov chain. Specifically, suppose that the probability that tomorrow will be a wet day is 0.662 if today is wet and 0.125 if today is dry. The probability that tomorrow will be a dry day is 0.875 if today is dry and 0.338 if today is wet.

If Monday is a dry day, what is the expected number of days until a wet day?
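The recipe in the problem statement can be sketched in a few lines of Python. This is only an illustration, not the textbook's or the site's solution. It assumes the column convention implied by "the column labeled $i$": entry `P[r][c]` is the probability of moving from the state labeling column `c` to the state labeling row `r`, so each column sums to 1. The helper names `invert` and `expected_steps` are made up for this example, and exact fractions are used to avoid rounding.

```python
from fractions import Fraction


def invert(A):
    """Invert a square matrix of Fractions by Gauss-Jordan elimination."""
    n = len(A)
    # Augment A with the identity matrix.
    M = [list(row) + [Fraction(int(r == c)) for c in range(n)]
         for r, row in enumerate(A)]
    for col in range(n):
        # Find a row with a nonzero pivot and swap it into place.
        pivot = next(r for r in range(col, n) if M[r][col] != 0)
        M[col], M[pivot] = M[pivot], M[col]
        # Scale the pivot row so the pivot becomes 1.
        p = M[col][col]
        M[col] = [x / p for x in M[col]]
        # Eliminate the pivot column from every other row.
        for r in range(n):
            if r != col and M[r][col] != 0:
                f = M[r][col]
                M[r] = [x - f * y for x, y in zip(M[r], M[col])]
    # The right half of the augmented matrix is now the inverse.
    return [row[n:] for row in M]


def expected_steps(P, labels, start, target):
    """Expected number of steps from `start` to `target`.

    Assumes P[r][c] is the probability of moving from labels[c] to labels[r].
    """
    # Delete the target's row and column, keeping the other labels.
    keep = [k for k, s in enumerate(labels) if s != target]
    # Build I - Q directly from the kept rows and columns of P.
    A = [[Fraction(int(r == c)) - P[r][c] for c in keep] for r in keep]
    N = invert(A)
    # Sum the column of (I - Q)^(-1) labeled with the start state.
    col = [labels[k] for k in keep].index(start)
    return sum(row[col] for row in N)


labels = ["wet", "dry"]
P = [[Fraction("0.662"), Fraction("0.125")],   # to wet, from (wet, dry)
     [Fraction("0.338"), Fraction("0.875")]]   # to dry, from (wet, dry)

days = expected_steps(P, labels, "dry", "wet")
print(days)  # 8
```

With the wet row and column deleted, $Q$ is the $1 \times 1$ matrix $[0.875]$, so $(I - Q)^{-1} = [1/0.125] = [8]$, which matches the printed value under the stated convention.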

Linear Algebra: A Modern Introduction

4th Edition

ISBN: 9781285463247

Author: David Poole

Publisher: Cengage Learning

Chapter 3: Matrices

Section 3.7: Applications

Problem 15EQ
