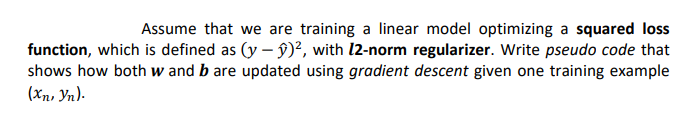

Assume that we are training a linear model by optimizing a squared loss function, defined as $(y - \hat{y})^2$, with an L2-norm regularizer. Write pseudocode that shows how both w and b are updated using gradient descent given one training example (Xn, Yn).
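One way to sketch the requested update is shown below in Python rather than pseudocode. It rests on several assumptions that the question does not state: the prediction is $\hat{y} = w \cdot x + b$, the objective for one example is $(y - \hat{y})^2 + \lambda \lVert w \rVert^2$, the bias b is not regularized, and the names `sgd_step`, `lr` (learning rate) and `lam` (regularization strength $\lambda$) are hypothetical. Under those assumptions the gradients are $\partial L / \partial w = -2(y - \hat{y})x + 2\lambda w$ and $\partial L / \partial b = -2(y - \hat{y})$.

```python
def sgd_step(w, b, x_n, y_n, lr=0.1, lam=0.01):
    """One gradient-descent update on a single example (x_n, y_n).

    Assumed objective: (y_n - y_hat)**2 + lam * ||w||**2,
    with y_hat = w . x_n + b and no regularization on b.
    """
    # Forward pass: linear prediction for this example.
    y_hat = sum(wi * xi for wi, xi in zip(w, x_n)) + b
    err = y_n - y_hat

    # Gradient of the squared loss plus the L2 penalty with respect to w.
    grad_w = [-2.0 * err * xi + 2.0 * lam * wi for wi, xi in zip(w, x_n)]
    # Gradient with respect to b (only the loss term contributes).
    grad_b = -2.0 * err

    # Step against the gradient.
    new_w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
    new_b = b - lr * grad_b
    return new_w, new_b


if __name__ == "__main__":
    w, b = sgd_step([0.0, 0.0], 0.0, [1.0, 2.0], 1.0, lr=0.1, lam=0.0)
    print(w, b)
```

If the course defines the loss with a factor of 1/2, or regularizes b as well, the constants in `grad_w` and `grad_b` change accordingly. The structure of the update stays the same.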

Q: Can you give an example of cost-benefit analysis for a management system software?

A: Cost-benefit analysis software is a computer program or suite that assists personnel in the complex ...

Q: Following the formatting of a hard disk drive, the filesystem must be utilized by all partitions on ...

A: Introduction Following the formatting of a hard disk drive, the filesystem must be utilized by all p...

Q: Assuming E = {a, b}, construct an optimized string matching automaton to determine all occurrences o...

A: Pattern searching is an important problem in computer science. When we do search for a string in not...

Q: Subject : Data Structure (C language) Explain the concept of binary search trees and the operations...

A:

Q: The term "reduced" refers to a computer that operates with a limited set of instructions. What does ...

A: The term "reduced" in that phrase was intended to describe the fact that the amount of work any sing...

Q: Write a program that generates 100 random integers between 100 and 1000 inclusive and displays the n...

A: Program: #include <iostream>using namespace std;int main() { srand((unsigned) time(NULL)); ...

Q: Determine some of the difficulties that will be involved in designing an information system architec...

A: Introduction: Business or organizational information systems are defined in formal terms by an infor...

Q: Name format: You must use String object and not StringBuffer or StringBuilder. Many documents use a ...

A: Step 1 : Start Step 2 : In the main method , declare the string variable to hold the name format. St...

Q: In an old house in Russia several chests full of hollow dolls have been discovered. These dolls a...

A: ANSWER:-

Q: Assembly language instructions may be optimized by writing compilers and assemblers that rearrange t...

A: The answer is

Q: What is an operating system and how does it work?

A: Introduction: The operating system is the backbone of every computer or mobile device when it comes ...

Q: Mousing devices equipped with an optical sensor that detects mouse movements.

A:

Q: Will testing a classifier using cross-validation build a better classifier? Why?

A: Let's see the solution

Q: Explain why process, dependability, requirements management, and reuse are core software engineering...

A: INTRODUCTION: SOFTWARE SYSTEM: A software system is a collection of interconnected components based ...

Q: Have you considered the three different kinds of IPv6 migration techniques?

A: Answer for the given question is in step-2.

Q: 2. Write an 8085 program to add two 8-bit numbers A1 and C0. Then store the final result into the Ac...

A: Write an 8085 program to add two 8-bit numbers A1 and C0. Then store the final result into the Accum...

Q: Specify the architecture of a computed unified device.

A: Compute Unified Device Architecture is equally registering engineering valuable for the help of uses...

Q: true/false There is only one end state in one activity diagram ( )

A: Activity diagrams are graphical representations of workflows of stepwise activities and actions with...

Q: Hw1 • read an image 1 from hard disk • display it • read an image 2 from hard disk • display it • re...

A: We will use numpy and opencv to code the program. opencv is a very powerful tool used for image proces...

Q: QUESTION THREE (3) Given the grammar G = ({S}, {a, b}, S, P) with production S → aSa, S → bSb, S → ε...

A: Given production rules: S → aSa S → bSb S → ε

Q: Define the concept of a unified memory architecture.

A: The goal of unified memory is to reduce data redundancy by copying data between separate areas of me...

Q: Please write a C program where the user will type in a text at the command line and the program will...

A: There are two types of comments in C. A comment that starts with a slash asterisk /* and finishes wit...

Q: Why were timers required in our rdt protocols in the first place?

A: The answer of this question is as follows:

Q: Use a paper-and-pencil approach to compute the following division in binary. i. Dividend = 1110, Div...

A: Given that use a paper and pencil approach to compute the following division in binary. Dividend = 1...

Q: Fill in the values for the empty nodes in the following game tree according to Minimax, where the ma...

A: Let's see the solution

Q: Take the two numbers from the user and try to multiply both of them using lambda function in python.

A: Name the expression multiply. It accepts two parameters and then returns the multiplication of the paramet...

Q: When surfing online, you get some strange data on an apparently secure website, and you realize you ...

A: Introduction: Certificate: If an organization wants to have a secure website that uses encryption, i...

Q: 1. Give a truth table that shows the (Boolean) value of each of the following Boolean expressions, f...

A: Two input values P and Q using the logic symbols NOT, AND. NOT (P AND Q) can be represented as ¬ (P ∧...

Q: Chap 5 In Class Exercise • Create a Java Project called C.5EX • Create a driver program called Order...

A: Please refer below code and screenshot for your reference: import java.util.*;public class Order{pri...

Q: Under what conditions would you argue in favor of building application software in assembly language code r...

A: Let's see the solution.

Q: The following image shows the “404 error” message that pops up when a web page does not upload for t...

A: 404 error is a common response from the webserver when it could not identify the requested webpage o...

Q: When to utilize implicit heap-dynamic variables, how to use them, and why to use them are all covere...

A: Introduction: The variable is tied to heap storage when a value is assigned to an IMPLICIT HEAP-DYNA...

Q: A regular polygon has n number of sides with each side of length s. The area of a regular polygon is...

A: The program is written in C++. Please check the source code and flowchart in the following steps.

Q: Is it more convenient for you to utilize a graphical user interface or a command-line interface? Why...

A: The Answer is given below step.

Q: WRITE A JAVA PROGRAM TO TAKE A LIST OF INTEGERS FROM THE USER AND PRINT THAT LIST WHERE EACH INTEGER...

A: I give the code in java along with code and output screenshot

Q: Need help creating a state chart diagram for the driving test appointment object. information can b...

A: Statechart diagram: A statechart diagram describes the flow of control from one state to anot...

Q: Define a Python function named expensive_counties that has two parameters. The first parameter is a ...

A: def expensive_counties(txt_file, csv_file): #creating an empty dict to store the results res...

Q: More than 90% of the microprocessors/microcontrollers manufactured are used in embedded computing

A: The answer is

Q: -For each separate user task, include a use case diagram. -For each use case, specify a use case des...

A: Use case diagram is a behavioral UML diagram type and frequently used to analyze various systems. Th...

Q: How can you avoid losing data of the database from disaster?

A: Disasters, whether natural or man-made, can occur at any time and at any location around the globe. Natural di...

Q: Question 3: If the image contains 800 x 600 pixels, with RGB coloring system, how many megabytes are...

A: Introduction

Q: A program is required to read from the screen the length and width of a rectangular house block, an...

A: The Answer for the given question is start from step-2.

Q: (If code is posted, please use PYTHON). For point in polygon operations, are the advantages and dis...

A: Introduction :A polygon may be represented as a number of line segments connected, end to from a clo...

Q: 1) Registers

A: The answer is

Q: Take a list of Strings from the user and sort that list in alphanumerically ascending order. The S...

A: Required:- Take a list of Strings from the user and sort that list in alphanumerically ascendi...

Q: Construct a tree diagram to show the elements of the sample space S

A:

Q: I need help with this. unsure how to start and or complete this problem Consider the following Jo...

A: Here I explain which choice is better: ...

Q: What if you modified the default runlevel/target of your system to reboot.target or runlevel 6?

A: The solution to the given question is: Runlevel 6 is mainly used for maintenance; it sends a warni...

Q: The SSTF disc scheduling approach has several dangers, which we'll go through below.

A: Introduction: The SSTF disc scheduling method, or Shortest Seek Time First, is a helpful and advanta...

Q: What additional components can be modified to maintain the same page size (and eliminate the need to...

A: Introduction: Recompilation is an excellent approach to rapidly get your business up to speed on man...


- Suppose you are using a linear SVM classifier on a two-class classification problem. You have been given the following data, in which the points circled in red are support vectors. a) Draw the decision boundary of the linear SVM and give a brief explanation. b) Suppose that instead of an SVM we use regularized logistic regression to learn the classifier. Circle the points such that removing that example from the training set and running regularized logistic regression would give a different decision boundary than training with regularized logistic regression on the full sample. Why?

- Consider a logistic regression classifier that implements the 2-input OR gate. At iteration t, the parameters are given by w0=0, w1=0, w2=0. Given binary input (x1,x2), the output of logistic regression is given by 1/(1+exp(-w0-w1*x1-w2*x2)). What will be the value of the loss function at t? What will be the values of w0, w1 and w2 at (t+1) with learning rate η=1?

- The task is to estimate two models: 1. Cobb-Douglas (after taking logs to convert it into log-linear form). 2. The linear model without logs.

- When performing Naive Bayes classification, especially for text classification, why is Laplace smoothing (additive smoothing) required? Explain using an example training set and test set, and show how omitting additive smoothing leads to undesirable outcomes.

- Consider a real random variable X with zero mean and variance $\sigma_X^2$. Suppose that we cannot directly observe X, but instead we can observe $Y_t := X + W_t$, $t \in [0, T]$, where $T > 0$ and $\{W_t : t \in \mathbb{R}\}$ is a WSS process with zero mean and correlation function $R_W$, uncorrelated with X. Further suppose that we use the following linear estimator to estimate X based on $\{Y_t : t \in [0, T]\}$: $\hat{X}_T = \int_0^T h(T - \theta)\, Y_\theta \, d\theta$, i.e., we pass the process $\{Y_t\}$ through a causal LTI filter with impulse response h and sample the output at time T. We wish to design h to minimize the mean-squared error of the estimate. a. Use the orthogonality principle to write down a necessary and sufficient condition for the optimal h. (The condition involves h, T, X, $\{Y_t : t \in [0, T]\}$, $\hat{X}_T$, etc.) b. Use part a to derive a condition involving the optimal h that has the following form: for all $\tau \in [0, T]$, $a = \int_0^T h(\theta)\,(b + c(\tau - \theta)) \, d\theta$, where a and b are constants and c is some function. (You must find a, b, and c in terms of the…

- Generate 100 synthetic data points (x, y) as follows: x is uniform over $[0,1]^{10}$ and $y = \sum_{i=1}^{10} i \cdot x_i + 0.1 \cdot N(0,1)$, where N(0,1) is the standard normal distribution. Implement full gradient descent and stochastic gradient descent, and test them on linear regression over the synthetic data points. Subject: Python Programming

- When a temperature gauge surpasses a threshold, your local nuclear power station sounds an alarm. Core temperature is gauged. Consider the Boolean variables A (alarm sounds), FA (faulty alarm), and FG (faulty gauge) and the multivalued nodes G (gauge reading) and T (real core temperature). Since the gauge is more likely to fail at high core temperatures, draw a Bayesian network for this domain.

- In R, write a function that produces plots of statistical power versus sample size for simple linear regression. The function should be of the form LinRegPower(N,B,A,sd,nrep), where N is a vector/list of sample sizes, B is the true slope, A is the true intercept, sd is the true standard deviation of the residuals, and nrep is the number of simulation replicates. The function should conduct simulations and then produce a plot of statistical power versus the sample sizes in N for the hypothesis test of whether the slope is different than zero. B and A can be vectors/lists of equal length. In this case, the plot should have separate lines for each pair of A and B values (A[1] with B[1], A[2] with B[2], etc). The function should produce an informative error message if A and B are not the same length. It should also give an informative error message if N only has a single value. Demonstrate your function with some sample plots. Find some cases where power varies from close to zero to near…

- Given a two-category classification problem under the univariate case, where there are two training sets (one for each category) as follows: $D_1 = \{-3, -1, 0, 4\}$, $D_2 = \{-2, 1, 2, 3, 6, 8\}$. Given the test example x = 5, please answer the following questions: a) Assume that the likelihood function of each category has a certain parametric form. Specifically, we have $p(x \mid \omega_1) \sim N(\mu_1, \sigma_1^2)$ and $p(x \mid \omega_2) \sim N(\mu_2, \sigma_2^2)$. Which category should we decide on when maximum-likelihood estimation is employed to make the prediction?

- Give answers to all subparts in clear handwriting. For the model P + L ⇋ PL, what does each variable represent? Explain. The following questions refer to the variable θ. a. What is the definition of θ in words? b. What is the maximum value of θ? Explain your answer.

- Explain why the linear predictor condition in predictor transformation is necessary.

- We are given the following training examples in 2D: ((-3, 5), +), ((-4, -2), +), ((2, 1), -), ((4, 3), -). Use +1 to map positive (+) examples and -1 to map negative (-) examples. We want to apply the learning algorithm for training a perceptron using the above data with starting weights $w_0 = w_1 = w_2 = 0$ and learning rate $\eta = 0.1$. In each iteration, process the training examples in the order given above. Complete at most 3 iterations over the above training examples. What are the weights at the end of each iteration? Are these weights final