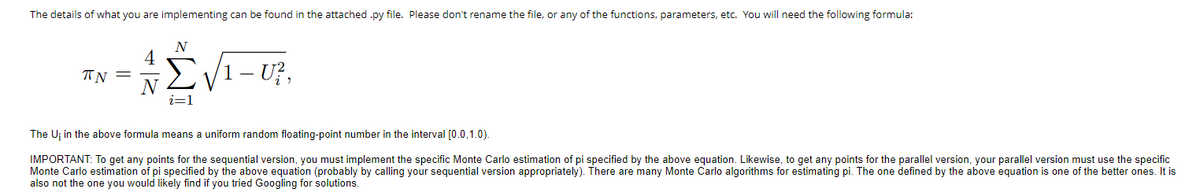

Please follow the instructions in the provided screenshots carefully to implement the code below in Python 3.10 or later.

def pi_monte_carlo(n):
    """Computes and returns an estimation of pi
    using Monte Carlo simulation.

    Keyword arguments:
    n - The number of samples.
    """
    pass

def pi_parallel_monte_carlo(n, p=4):
    """Computes and returns an estimation of pi
    using a parallel Monte Carlo simulation.

    Keyword arguments:
    n - The total number of samples.
    p - The number of processes to use.
    """
    # You can distribute the work across p
    # processes by having each process
    # call the sequential version, where
    # those calls divide the n samples across
    # the p calls.
    # Once those calls return, simply average
    # the p partial results and return that average.
    pass
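
A minimal sketch of how the two stubs above could be filled in, based only on the docstrings and comments (the screenshots are not reproduced here, so any further requirements they state are not reflected). Sampling with `random.random()` and distributing the calls with `multiprocessing.Pool.map` are assumptions, not necessarily the approach the assignment requires.

```python
import random
from multiprocessing import Pool


def pi_monte_carlo(n):
    """Estimate pi from n random points in the unit square."""
    inside = 0
    for _ in range(n):
        x = random.random()
        y = random.random()
        # Count the point if it falls inside the quarter circle of radius 1.
        if x * x + y * y <= 1.0:
            inside += 1
    # (quarter-circle area) / (unit-square area) = pi / 4
    return 4 * inside / n


def pi_parallel_monte_carlo(n, p=4):
    """Average p sequential estimates, each using n // p samples."""
    # Assumption: n is divisible by p, as the table's choice of n guarantees.
    with Pool(p) as pool:
        partials = pool.map(pi_monte_carlo, [n // p] * p)
    return sum(partials) / p
```

When running this as a script, call `pi_parallel_monte_carlo` from under an `if __name__ == "__main__":` guard, since some platforms start worker processes by re-importing the main module.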

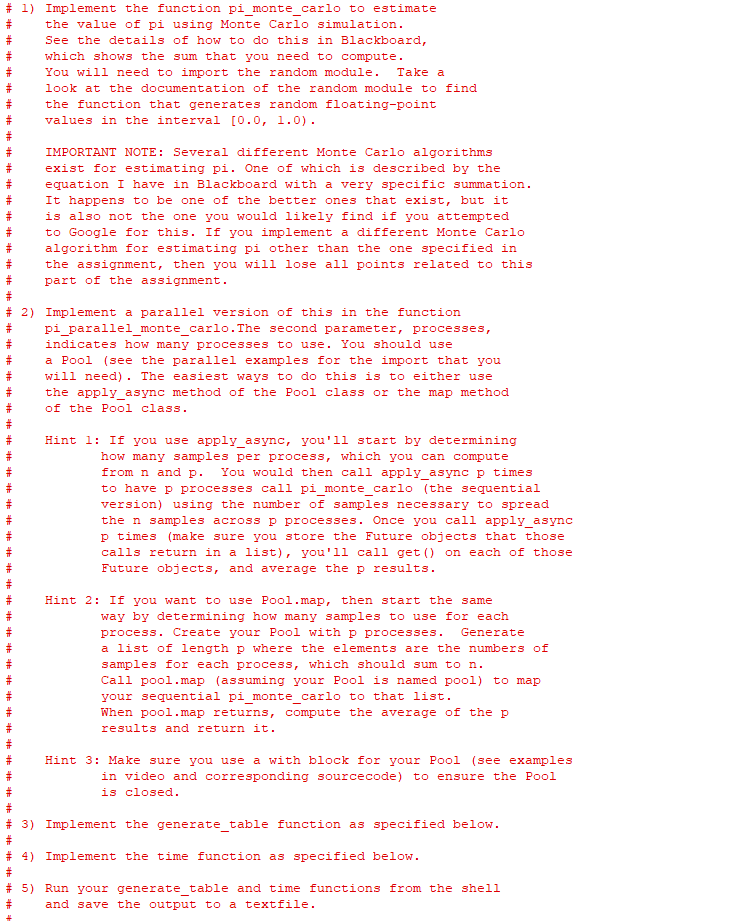

def generate_table():
    """This function should generate and print a table
    of results to demonstrate that both versions
    compute increasingly accurate estimations of pi
    as n is increased. It should use the following
    values of n = {12, 24, 48, ..., 12582912}. That is,
    the first value of n is 12, and then each subsequent
    n is 2 times the previous. The reason for starting at 12
    is so that n is always divisible by 1, 2, 3, and 4.
    The first column should be n, the second column should
    be the result of calling pi_monte_carlo(n), and you
    should then have 4 more columns for the parallel
    version, but with 1, 2, 3, and 4 processes in the Pool."""
    pass

def time():
    """This function should generate a table of runtimes
    using timeit. Use the same columns and values of
    n as in the generate_table() function. When you use timeit
    for this, pass number=1 (because the high n values will be slow)."""
    pass
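
The two table functions described above could be built along these lines. This is a sketch under stated assumptions: the helper names `sample_sizes`, `format_row`, `table_rows`, and `timing_rows` are hypothetical, the column widths are arbitrary, and the estimator functions are passed in as arguments so the block stands on its own.

```python
import timeit

PROCESS_COUNTS = (1, 2, 3, 4)


def sample_sizes(first=12, last=12_582_912):
    """Return [12, 24, 48, ..., 12582912], doubling each time."""
    sizes = []
    n = first
    while n <= last:
        sizes.append(n)
        n *= 2
    return sizes


def format_row(n, values):
    """Format n followed by the given floats as fixed-width columns."""
    cells = [f"{n:>10}"] + [f"{v:>12.6f}" for v in values]
    return " ".join(cells)


def table_rows(sequential, parallel, sizes):
    """Yield one row per n: sequential result, then parallel with p = 1..4."""
    for n in sizes:
        values = [sequential(n)]
        values += [parallel(n, p) for p in PROCESS_COUNTS]
        yield format_row(n, values)


def timing_rows(sequential, parallel, sizes):
    """Yield one row per n of runtimes in seconds, measured with number=1."""
    for n in sizes:
        times = [timeit.timeit(lambda: sequential(n), number=1)]
        for p in PROCESS_COUNTS:
            times.append(timeit.timeit(lambda: parallel(n, p), number=1))
        yield format_row(n, times)
```

With real implementations in place, `generate_table()` could print each row of `table_rows(pi_monte_carlo, pi_parallel_monte_carlo, sample_sizes())`, and `time()` could do the same with `timing_rows`.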

How do I get this code to output the data into a text file?
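
One possible way to do this, sketched with the standard library only: either pass `file=` to `print` when writing rows, or wrap a function that already prints in `contextlib.redirect_stdout`. The names `write_rows`, `capture_to_file`, and `print_demo_table` are hypothetical, and `print_demo_table` is only a stand-in for the real `generate_table()`.

```python
from contextlib import redirect_stdout


def print_demo_table():
    """Stand-in for a function that prints a table to standard output."""
    print("n estimate")
    print("12 3.0")


def write_rows(path, rows):
    """Write each row to the text file at path, one row per line."""
    with open(path, "w", encoding="utf-8") as f:
        for row in rows:
            print(row, file=f)


def capture_to_file(path, func):
    """Call func() and send everything it prints to the file at path."""
    with open(path, "w", encoding="utf-8") as f:
        with redirect_stdout(f):
            func()
```

For example, `capture_to_file("results.txt", generate_table)` would save the printed table without changing `generate_table()` itself. Note that `redirect_stdout` only affects the current process, so anything printed inside worker processes would not be captured.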