Elementary Linear Algebra (MindTap Course List)

8th Edition

ISBN: 9781305658004

Author: Ron Larson

Publisher: Cengage Learning


Question

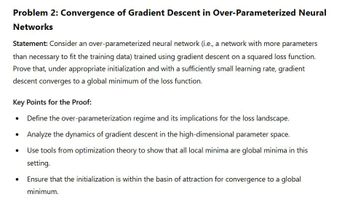

Transcribed Image Text: Problem 2: Convergence of Gradient Descent in Over-Parameterized Neural Networks

Statement: Consider an over-parameterized neural network (i.e., a network with more parameters than necessary to fit the training data) trained using gradient descent on a squared loss function. Prove that, under appropriate initialization and with a sufficiently small learning rate, gradient descent converges to a global minimum of the loss function.
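A minimal numerical sketch of the claim, under assumptions that are not part of the problem: a two-layer ReLU network f(x) = (1/√m) Σ_r a_r·relu(w_r·x) with m hidden units, output signs a_r ∈ {−1, +1} fixed at initialization and only the hidden weights w_r trained (a setup common in wide-network analyses), Gaussian initialization, and full-batch gradient descent on L = ½ Σ_i (f(x_i) − y_i)². The function name `train_two_layer`, the width of 200, the learning rate 0.5, the step count, the seed and the four-point dataset are all hypothetical choices for illustration. With 400 trainable weights for 4 training points the model is over-parameterized, and the recorded loss should shrink toward zero; a run like this illustrates the statement but does not prove it.

```python
import math
import random


def relu(z):
    return z if z > 0.0 else 0.0


def train_two_layer(xs, ys, width=200, lr=0.5, steps=500, seed=0):
    """Full-batch gradient descent on L(W) = 1/2 * sum_i (f(x_i) - y_i)^2.

    Hypothetical model used for illustration:
        f(x) = (1/sqrt(m)) * sum_r a_r * relu(w_r . x),
    where the output signs a_r are fixed at initialization and only the
    hidden weights w_r are trained. Returns the loss recorded before each step.
    """
    rng = random.Random(seed)
    d = len(xs[0])
    scale = 1.0 / math.sqrt(width)
    # Gaussian initialization of hidden weights; random +/-1 output signs.
    W = [[rng.gauss(0.0, 1.0) for _ in range(d)] for _ in range(width)]
    a = [rng.choice((-1.0, 1.0)) for _ in range(width)]

    losses = []
    for _ in range(steps):
        # Forward pass: pre-activations z[i][r] = w_r . x_i.
        z = [[sum(wk * xk for wk, xk in zip(w, x)) for w in W] for x in xs]
        preds = [scale * sum(a_r * relu(z_ir) for a_r, z_ir in zip(a, zi)) for zi in z]
        resid = [p - y for p, y in zip(preds, ys)]
        losses.append(0.5 * sum(e * e for e in resid))

        # dL/dw_r = scale * a_r * sum_i resid_i * 1[z_ir > 0] * x_i
        for r in range(width):
            grad = [0.0] * d
            for i, x in enumerate(xs):
                if z[i][r] > 0.0:
                    for k in range(d):
                        grad[k] += resid[i] * x[k]
            for k in range(d):
                W[r][k] -= lr * scale * a[r] * grad[k]
    return losses


# Four distinct unit-norm inputs in R^2 (n = 4) with arbitrary targets.
xs = [(1.0, 0.0), (0.0, 1.0), (-1.0, 0.0), (0.0, -1.0)]
ys = [1.0, -0.5, 0.25, 0.75]
losses = train_two_layer(xs, ys)
print(f"initial loss {losses[0]:.4f}, final loss {losses[-1]:.2e}")
```

The four inputs are unit vectors that are pairwise orthogonal or opposite, which keeps the toy problem well conditioned; nearly parallel inputs or a much narrower network would typically slow convergence.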

Key Points for the Proof:

• Define the over-parameterization regime and its implications for the loss landscape.
• Analyze the dynamics of gradient descent in the high-dimensional parameter space.
• Use tools from optimization theory to show that all local minima are global minima in this setting.
• Ensure that the initialization is within the basin of attraction for convergence to a global minimum.
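One standard route through these key points is the linearization (neural-tangent-kernel style) argument, summarized below as a hedged sketch rather than a full proof or the solution referenced on this page. It assumes distinct training inputs, random initialization, and a network wide enough for the conditions stated below to hold. Write u(θ) for the vector of network outputs on the n training points, J(θ) for its Jacobian, and H_t = J(θ_t)J(θ_t)ᵀ for the Gram (tangent-kernel) matrix at step t. Since rank(H_t) ≤ min(n, p), having p ≥ n parameters is necessary for λ_min(H_t) > 0, and in the usual analyses large width makes λ_min(H_0) ≥ λ_0 > 0 hold with high probability at initialization. The "basin of attraction" point corresponds to showing that the iterates stay close enough to θ_0 for H_t to remain well conditioned. The contraction below is for the linearized recursion; a complete proof must also bound the Taylor remainder.

```latex
% Notation: data (x_i, y_i), i = 1, ..., n; parameters \theta \in \mathbb{R}^p with p \ge n.
u(\theta) = \bigl(f(x_1;\theta), \dots, f(x_n;\theta)\bigr)^\top, \qquad
L(\theta) = \tfrac12 \lVert u(\theta) - y \rVert_2^2, \qquad
J(\theta) = \frac{\partial u}{\partial \theta} \in \mathbb{R}^{n \times p}.

% Gradient descent step and its first-order effect on the residual r_t = u(\theta_t) - y:
\theta_{t+1} = \theta_t - \eta\, J(\theta_t)^\top r_t
\quad\Longrightarrow\quad
r_{t+1} \approx \bigl(I - \eta H_t\bigr)\, r_t, \qquad H_t = J(\theta_t)\, J(\theta_t)^\top.

% If \lambda_{\min}(H_t) \ge \lambda_0 / 2 > 0 and \eta\, \lambda_{\max}(H_t) \le 1 for all t,
% then for the linearized recursion
\lVert r_{t+1} \rVert_2 \le \Bigl(1 - \tfrac{\eta \lambda_0}{2}\Bigr) \lVert r_t \rVert_2
\quad\Longrightarrow\quad
L(\theta_t) \le \Bigl(1 - \tfrac{\eta \lambda_0}{2}\Bigr)^{t} L(\theta_0).
```

Because L(θ) ≥ 0 for every θ, driving L(θ_t) to zero means gradient descent reaches a global minimum; this route establishes convergence directly along the trajectory rather than by characterizing every local minimum of the landscape.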


Similar questions

- Show that the ridge estimator is (1) biased but (2) more efficient than the ordinary least squares estimator when X is non-orthonormal but full rank. Hint: For the efficiency, use the SVD and some convincing arguments. Matrix inequalities are not required.

- A certain experiment produces the data (0, 1), (−1, 2), (1, 0.5), (2, −0.5). Find values for a, b, and c which describe the model that produces a least squares fit of the points by a function of the form f(x) = ax² + bx + c.

- (only if possible, preferably in Minitab, but you can do it manually)

- Given a collection of data points {(xᵢ, yᵢ)}, find the best least squares approximation of the form y = ax² + bx³.

- Explain the two-stage least squares estimator.

- An article presents the results of an experiment in which the surface roughness (in μm) was measured for 15 D2 steel specimens and compared with the roughness predicted by a neural network model. The results, as (true value x, predicted value y) pairs, are: (0.45, 0.400), (0.82, 0.7), (0.54, 0.52), (0.41, 0.39), (0.77, 0.74), (0.79, 0.78), (0.25, 0.27), (0.62, 0.6), (0.91, 0.87), (0.52, 0.51), (1.02, 0.91), (0.6, 0.71), (0.58, 0.5), (0.87, 0.91), (1.06, 1.04). To check the accuracy of the prediction method, the linear model y = β₀ + β₁x + ε is fit. If the prediction method is accurate, the value of β₀ will be 0 and the value of β₁ will be 1. Note: This problem has a reduced data set for ease of performing the calculations required. This differs from the data set given for this problem in the text.

- 2. Consider the given points (1, 1), (3, 2), (4, 3), (5, 6). (a) Find the best least squares fit by a linear function (linear regression) and compute the sum of squared errors. (b) Find a polynomial of degree 3 that interpolates the points. (c) Find the best least squares exponential fit y = piet. Compute the sum of squared errors and compare with the result from (a).

- Graph the data and observe the relationship between x and y: is it linear or nonlinear? Predict which least squares approximation, P₁(x) (linear) or P₂(x) (second-order polynomial), is better, or whether they are similar. (Do not find P₁ or P₂; no calculations are involved in this problem.) Data (xᵢ, yᵢ): (0, 1.0), (0.15, 1.004), (0.31, 1.031), (0.5, 1.117), (0.6, 1.223), (0.75, 1.422).

- all 3 - true or false

- Can someone please explain to me ASAP??!!

- Show that the first least squares assumption, E(uᵢ | Xᵢ) = 0, implies that E(Yᵢ | Xᵢ) = β₀ + β₁Xᵢ.

- True or false? If false, explain briefly. a) Some of the residuals from a least squares linear model will be positive and some will be negative. b) Least squares means that some of the squares of the residuals are minimized. c) We write ŷ to denote the predicted values and y to denote the observed values.


Recommended textbooks for you

- Elementary Linear Algebra (MindTap Course List), Algebra, ISBN: 9781305658004, Author: Ron Larson, Publisher: Cengage Learning

- Linear Algebra: A Modern Introduction, Algebra, ISBN: 9781285463247, Author: David Poole, Publisher: Cengage Learning
